Table of contents

- What is a DevOps Pipeline?

- Source Control – The Bedrock of Collaboration

- Continuous Integration – Ensuring Code Quality Continuously

- Continuous Deployment & Continuous Delivery – Seamless Transitions

- Testing in DevOps – Ensuring Code Quality and Reliability

- Infrastructure as Code (IaC) – Modern Infrastructure Management

- Monitoring & Feedback – The Heartbeat of a Healthy System

- Configuration Management

- Containerization with Docker

- Security in the DevOps Pipeline

- Artifact Storage and Management

- Rollbacks in Deployments

- Containerization with Kubernetes

- Advanced Techniques in DevOps Pipeline

- Conclusion

DevOps has revolutionized the software development and deployment processes. One of its core concepts is the pipeline, a set of automated processes designed to get code from development to production efficiently. This guide will provide a comprehensive look at DevOps pipelines, starting with the basics and diving deep into advanced concepts, techniques, and real-world examples.

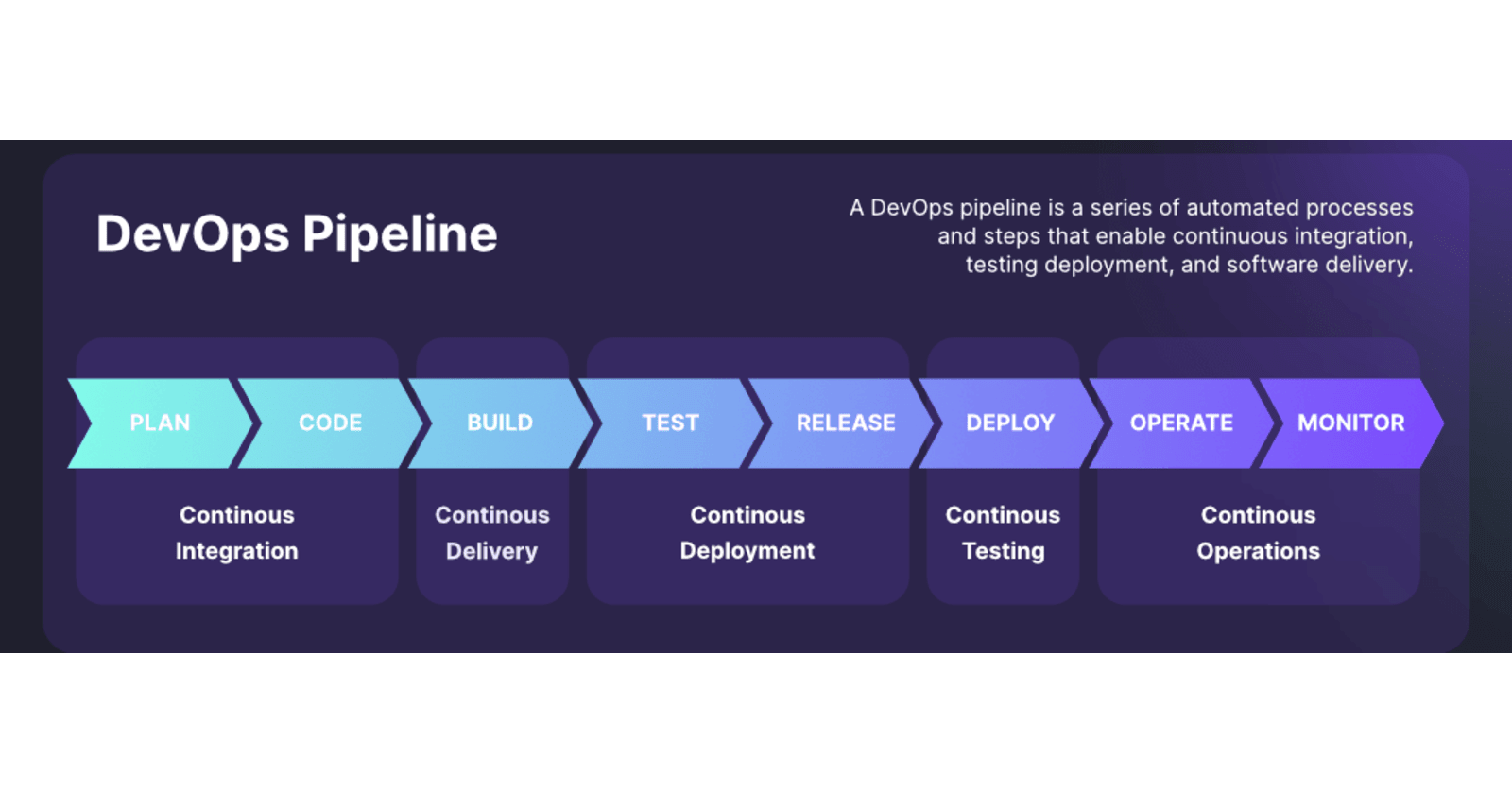

What is a DevOps Pipeline?

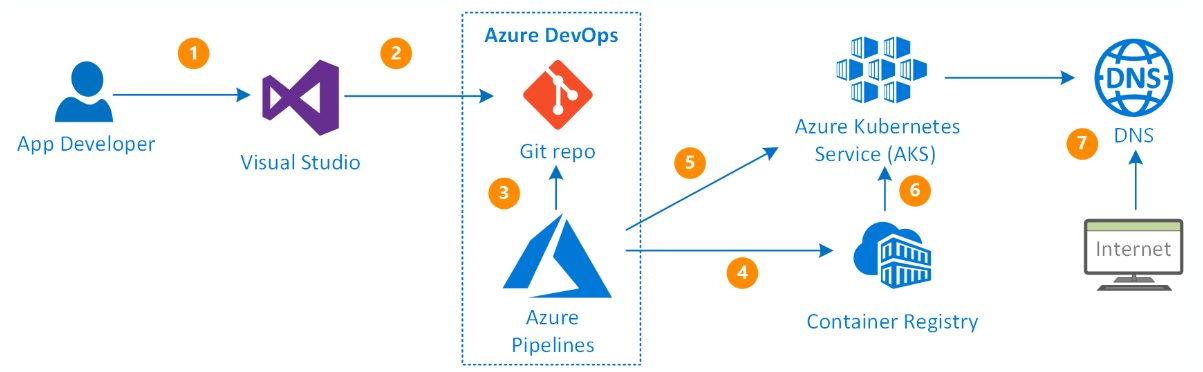

A DevOps pipeline is a set of automated processes that facilitate continuous integration (CI) and continuous deployment (CD). This means that as developers write code, it's tested, integrated, and then deployed automatically, leading to more frequent and reliable software releases.

Source Control – The Bedrock of Collaboration

Introduction to Source Control

Source control, or version control, isn't just about tracking changes — it's about fostering collaboration and ensuring traceability in software development projects.

Why Source Control is Crucial

Historical Traceability: Ever wondered who introduced a bug or why a particular change was made? Source control provides a time machine to go back and understand every change.

Parallel Development: Different teams can work on multiple features simultaneously without stepping on each other's toes, thanks to branching.

Disaster Recovery: Accidentally deleted a module? Retrieve it effortlessly with version history.

Centralized vs. Distributed Version Control

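Centralized (CVCS): Like a library. There is one central repository. Users "check out" a working copy from it and "check in" (commit) their changes to it. Some systems can also lock a file so that no one else can change it until the lock is released.

Real-life Example: In a CVCS like SVN, two developers can edit the same file at once, but the second one to commit is rejected as out of date and must first update and merge the other's change. If the file has been locked with svn lock, other developers cannot commit changes to it until the lock is released.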

Distributed (DVCS): Every user has a complete local copy. They can make changes and then "push" these changes to a shared repository.

Real-life Scenario: In platforms like GitHub, two developers can edit the same file. When they try to merge their changes, Git will either automatically combine the changes or flag a merge conflict if the same lines have been edited.

Exploring Git in Depth

Git's Internal Architecture: Git doesn't just track changes — it stores "snapshots" of files. When files don't change between commits, Git simply links to the previous identical file it has already stored.
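To make the snapshot idea concrete, here is a minimal sketch of how Git derives the ID of a stored file (a "blob"). It assumes a repository using Git's default SHA-1 object format; the helper name GitBlobId is made up for this illustration and is not part of any Git library.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Computes the object ID Git assigns to a file's content (a "blob"):
// SHA-1 over the header "blob <byte length>\0" followed by the raw bytes.
static string GitBlobId(string content)
{
    byte[] body = Encoding.UTF8.GetBytes(content);
    byte[] header = Encoding.ASCII.GetBytes($"blob {body.Length}\0");
    byte[] data = new byte[header.Length + body.Length];
    header.CopyTo(data, 0);
    body.CopyTo(data, header.Length);
    return Convert.ToHexString(SHA1.HashData(data)).ToLowerInvariant();
}

string first = GitBlobId("hello\n");
string second = GitBlobId("hello\n");   // same content in a later commit
string edited = GitBlobId("hello!\n");

Console.WriteLine(first);            // ce013625030ba8dba906f756967f9e9ca394464a
Console.WriteLine(first == second);  // True: one stored object serves both commits
Console.WriteLine(first == edited);  // False: changed content gets a new object
```

Because the ID depends only on the content, an unchanged file maps to the object Git already has, which is why a new commit can simply point at it.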

Branching and Merging: Branching is Git's killer feature. You can branch off the main codebase, make experimental changes, and then merge them back.

    git checkout -b experimental-feature
    # ...edit files, then stage them...
    git add .
    git commit -m "New experimental feature added"
    git checkout master
    git merge experimental-feature

Handling Conflicts: Sometimes, two branches modify the same piece of code. Git will flag this, allowing manual intervention to decide which change to keep.

Popular Platforms

Beyond just Git, platforms like GitHub and GitLab provide collaboration tools — issue tracking, CI/CD integrations, code reviews, and more.

Challenges in Source Control

Complex Histories: Over time, repositories can have convoluted histories. It's crucial to maintain clean, understandable histories through practices like squashing commits or using clear commit messages.

Learning Curve: Git, in particular, has a reputation for being hard to learn for newcomers. However, its power and flexibility outweigh the initial learning hurdle.

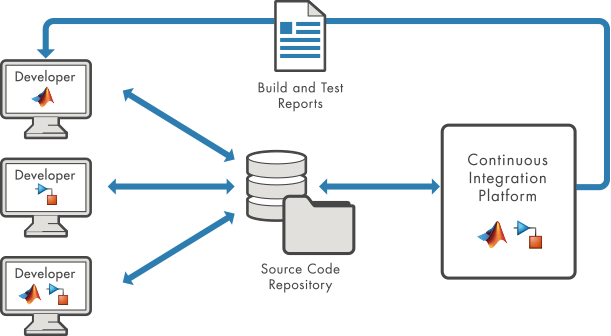

Continuous Integration – Ensuring Code Quality Continuously

The Essence of CI

CI isn't about tools or platforms — it's a philosophy. It dictates that code should be integrated continuously, meaning as often as possible, to catch defects early.

Key Principles of CI

Regular Code Integration: Merge code into the main branch frequently to detect errors ASAP.

Automate Everything: From building the application to running tests, every step should be automated.

Immediate Feedback: If something breaks, developers should be alerted immediately. Fast feedback loops lead to quicker resolutions.

CI in Action: Tools and Practices


Jenkins: This open-source tool is the granddaddy of CI servers. With its vast plugin ecosystem, you can integrate it with practically every tool in the DevOps landscape.

Real-life Implementation: A mobile app development team sets up Jenkins to automatically build their app and run unit tests every time someone pushes code to the master branch.

Travis CI & GitHub Actions: Hosted CI services that are configured through a YAML file committed to the repository (.travis.yml, or workflow files under .github/workflows), so there is no build server of your own to maintain.

Beyond Just Building: Quality Gates

CI isn't just about compiling code. It's about ensuring quality. Integrate static code analysis tools, security vulnerability scanners, and other quality gate tools in the CI process.

Challenges in CI

Flaky Tests: Tests that sometimes pass and sometimes fail. They reduce trust in tests and can waste developer time.

Build Optimization: As codebases grow, build times can increase. It's essential to keep build times short to ensure quick feedback.
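One way to spot flaky tests is to rerun a test several times and look at the pattern of results. The sketch below is only an illustration of that idea: the Classify helper and the three sample tests are hypothetical and do not come from any CI tool.

```csharp
using System;

// Runs a test several times and classifies it by its pass/fail pattern.
static string Classify(Func<bool> test, int runs)
{
    int passes = 0;
    for (int i = 0; i < runs; i++)
    {
        bool ok;
        try { ok = test(); }
        catch (Exception) { ok = false; }  // a thrown exception counts as a failure
        if (ok) passes++;
    }
    if (passes == runs) return "stable-pass";
    if (passes == 0) return "stable-fail";
    return "flaky";
}

// Hypothetical tests: the third fails on every other run to simulate leaked shared state.
int calls = 0;
Console.WriteLine(Classify(() => 1 + 1 == 2, 10));        // stable-pass
Console.WriteLine(Classify(() => 1 + 1 == 3, 10));        // stable-fail
Console.WriteLine(Classify(() => calls++ % 2 == 0, 10));  // flaky
```

A test reported as flaky is a candidate for quarantine or repair rather than a reason to rerun the whole build until it turns green.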

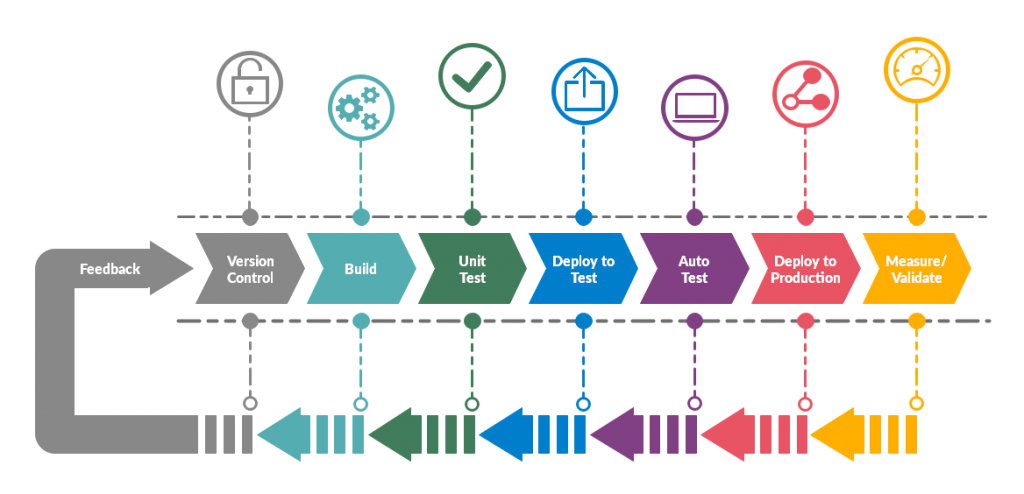

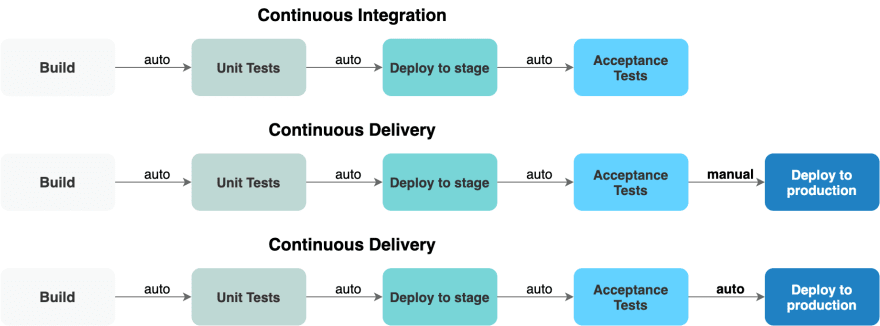

Continuous Deployment & Continuous Delivery – Seamless Transitions

What is CD?

Continuous Delivery and Continuous Deployment take the code that has been integrated in the CI process and ensure it can be delivered to end users. Although the terms are often used interchangeably, they have distinct meanings.

Continuous Delivery

It’s all about ensuring that code is always in a deployable state, even if you don't deploy every change to production. It emphasizes safety and speed of deliveries.

Continuous Deployment

This is the next step: automatically deploying every change that passes the CI tests to production. It allows for immediate feedback and faster delivery of features.

Automating Deployments with Octopus Deploy

Octopus Deploy is a deployment automation server that integrates with your existing build pipeline.

    // Example C# script to push a package to Octopus Deploy
    using System.IO;
    using Octopus.Client;

    var endpoint = new OctopusServerEndpoint("http://your-octopus-domain.com", "API-YOURAPIKEY");
    var repository = new OctopusRepository(endpoint);

    // The built-in feed reads the package ID and version from the file name,
    // so the name passed here follows the <id>.<version>.<extension> pattern.
    using (var fileStream = new FileStream(@"path\to\your\package.zip", FileMode.Open))
    {
        repository.BuiltInPackageRepository.PushPackage("YourApp.1.0.0.zip", fileStream);
    }

Key Tools in CD

Spinnaker: An open-source multi-cloud continuous delivery platform.

Jenkins: Beyond CI, Jenkins can also handle deployment tasks, especially with the Jenkins Pipeline plugin.

ArgoCD: A Kubernetes-native tool that emphasizes declarative setups.

Real-life CD Scenario

For an online streaming service, developers integrate a new recommendation algorithm. With Continuous Deployment, once they push their code:

It's picked up by Jenkins.

Unit and Integration tests run.

The code is automatically deployed to a staging environment.

E2E tests run.

If all is green, the new feature is deployed to production — all within an hour of the initial push.
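The flow above can be sketched as an ordered list of stages that stops at the first failure. This is a simplified model, not how Jenkins is implemented: RunPipeline is a hypothetical helper, and the lambdas stand in for real build, test, and deploy steps.

```csharp
using System;
using System.Collections.Generic;

// Runs stages in order and stops at the first failure,
// so a change that breaks a test never reaches production.
static List<string> RunPipeline(IEnumerable<(string Name, Func<bool> Run)> stages)
{
    var log = new List<string>();
    foreach (var (name, run) in stages)
    {
        bool ok = run();
        log.Add($"{name}: {(ok ? "passed" : "FAILED")}");
        if (!ok) break;  // later stages are skipped
    }
    return log;
}

// Stage names mirror the scenario; the E2E stage simulates a failure.
var release = new List<(string Name, Func<bool> Run)>
{
    ("unit and integration tests", () => true),
    ("deploy to staging", () => true),
    ("end-to-end tests", () => false),
    ("deploy to production", () => true),
};

foreach (string line in RunPipeline(release))
    Console.WriteLine(line);
// unit and integration tests: passed
// deploy to staging: passed
// end-to-end tests: FAILED
```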

Challenges in CD

Database Migrations: How to handle evolving data structures without downtime.

Feature Flagging: Deploying code to production without releasing it to all users.

Rollbacks: When things go wrong, how quickly can you revert?

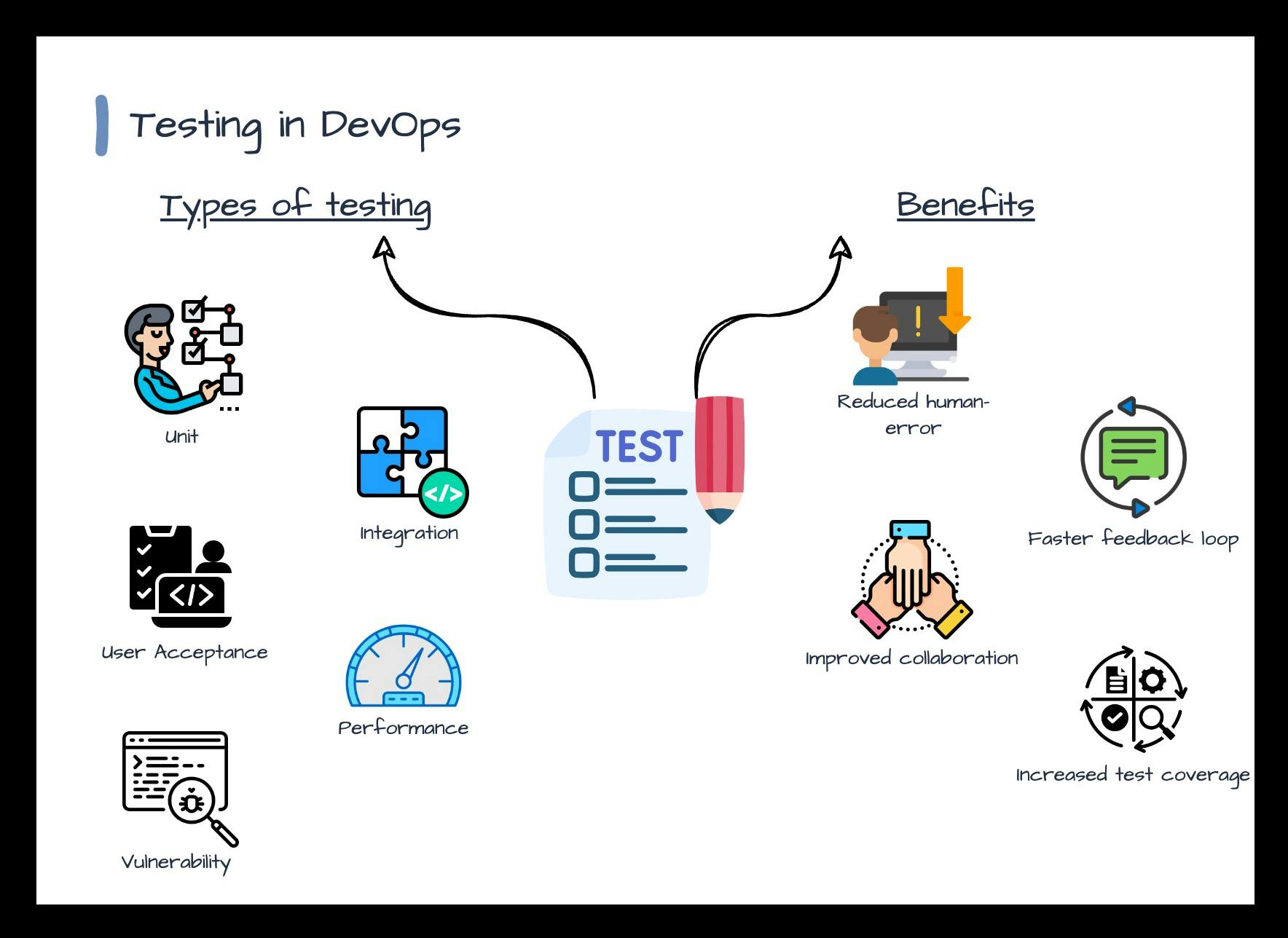

Testing in DevOps – Ensuring Code Quality and Reliability

The Importance of Testing

At the heart of DevOps is a desire to deliver software rapidly without compromising quality. Testing ensures that the code not only functions correctly but is also efficient, secure, and user-friendly.

The Testing Pyramid

This concept helps teams remember the right balance of tests:

Unit Testing: These are the foundation. Quick tests that verify the smallest parts of your application (individual functions or methods) in isolation.

Unit Testing with NUnit:

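    using NUnit.Framework;

    // Minimal class under test, included so the example is self-contained.
    public static class Calculator
    {
        public static int Add(int a, int b) => a + b;
    }

    [TestFixture]
    public class CalculatorTests
    {
        [Test]
        public void Add_TwoNumbers_ReturnsSum()
        {
            var result = Calculator.Add(1, 2);
            Assert.That(result, Is.EqualTo(3));
        }
    }

Real-Life Scenario: A backend team developing a REST API writes unit tests for every utility function, ensuring that they handle data correctly.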

Integration Testing: Validates interactions between components.

Example: Testing how two microservices communicate over a network.

Integration Testing with xUnit:

    using System.Net.Http;
    using System.Threading.Tasks;
    using Xunit;

    public class ApiIntegrationTests
    {
        [Fact]
        public async Task GetEndpoint_ReturnsSuccess()
        {
            using var client = new HttpClient();
            var response = await client.GetAsync("https://api.yourservice.com/items");
            response.EnsureSuccessStatusCode(); // throws if the status code is not 2xx
        }
    }

End-to-End (E2E) Testing: Validates entire application flows. These are closer to how users interact with the application. Tools like Selenium are popular for E2E tests.

End-to-End (E2E) Testing with Selenium and C#:

    using NUnit.Framework;
    using OpenQA.Selenium;
    using OpenQA.Selenium.Chrome;

    [TestFixture]
    public class WebTests
    {
        private IWebDriver driver;

        [SetUp]
        public void StartBrowser()
        {
            driver = new ChromeDriver();
        }

        [Test]
        public void TestWebPageFunctionality()
        {
            driver.Url = "https://www.example.com/login";
            driver.FindElement(By.Id("username")).SendKeys("testuser");
            driver.FindElement(By.Id("password")).SendKeys("password123");
            driver.FindElement(By.Id("submit")).Click();
            Assert.That(driver.Title, Is.EqualTo("Dashboard"));
        }

        [TearDown]
        public void CloseBrowser()
        {
            driver.Quit(); // ends the session and closes every browser window
        }
    }

Real-Life Scenario: An e-commerce site runs E2E tests to simulate a user's journey from selecting a product, adding it to the cart, checking out, and receiving a confirmation.

The Shift Left Approach

Traditionally, testing was a phase that occurred after development. "Shift Left" is the practice of testing early and often in the development process. This allows for early defect detection, resulting in cheaper and quicker fixes.

Test Automation in CI/CD

Automated tests should run as part of the CI pipeline, ensuring that defects are caught before they reach production.

Challenges in Testing

Maintaining Test Suites: As software grows, tests can become outdated or redundant.

Flaky Tests: Tests that inconsistently pass or fail can erode trust in the testing process.

Environment Differences: Differences between local, staging, and production environments can lead to tests passing in one environment but failing in another.

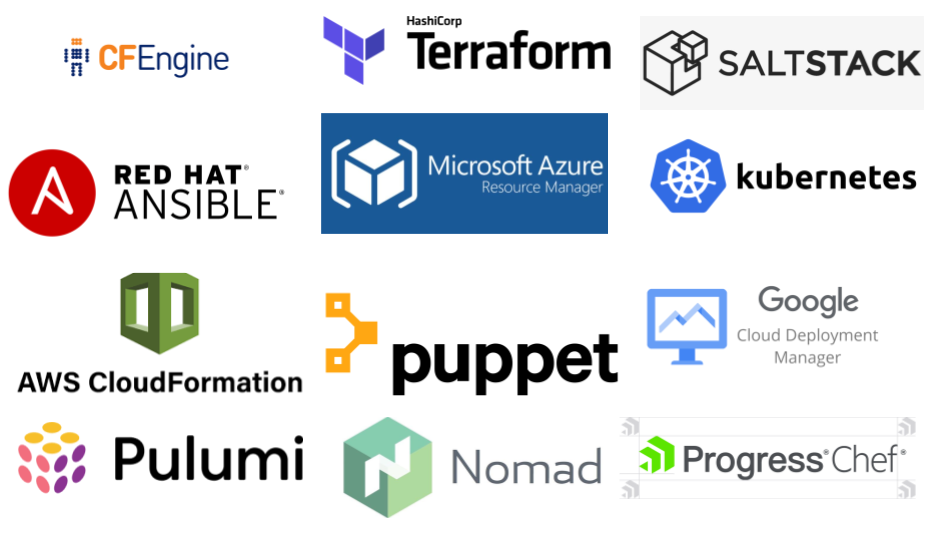

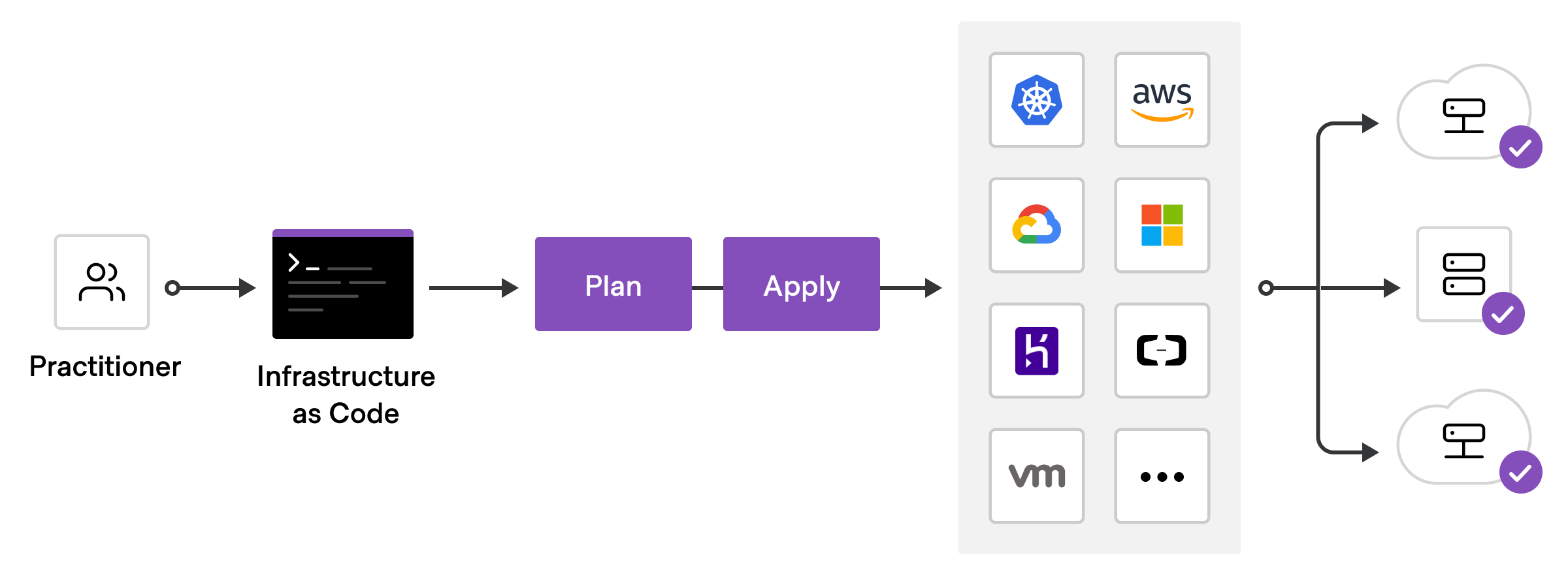

Infrastructure as Code (IaC) – Modern Infrastructure Management

The Rise of IaC

Infrastructure used to be set up manually, and provisioning servers could take days or weeks. IaC treats infrastructure setup as code, allowing for quick, consistent, and automated setups.

Benefits of IaC

Consistency: No more "it works on my machine" issues. Every environment, from a developer's laptop to the production server, is consistent.

Version Control: Infrastructure changes are tracked, allowing for easy rollbacks and understanding of changes.

Automation: Infrastructure can be set up with a single command, dramatically speeding up the process.

Key IaC Tools

Terraform: A tool that allows for multi-cloud infrastructure setups.

    resource "aws_instance" "example" {
      ami           = "ami-0c55b159cbfafe1f0"
      instance_type = "t2.micro"
    }

Ansible: A simple yet powerful automation tool for configuration management and application deployments.

    - hosts: web_servers
      tasks:
        - name: Ensure Apache is installed
          yum:
            name: httpd
            state: present

Example: Managing Azure Infrastructure with ARM Templates and C#

Azure Resource Manager (ARM) templates allow you to define and deploy your infrastructure. Here's how you can deploy an ARM template using C#.

    using System.IO;
    using Microsoft.Azure.Management.ResourceManager;
    using Microsoft.Azure.Management.ResourceManager.Models;
    using Microsoft.Rest.Azure.Authentication;

    // Authenticate as a service principal
    var serviceClientCredentials = ApplicationTokenProvider.LoginSilentAsync(
        "your-domain", "your-client-id", "your-client-secret").Result;

    var resourceManagementClient = new ResourceManagementClient(serviceClientCredentials)
    {
        SubscriptionId = "your-subscription-id"
    };

    // Incremental mode adds or updates resources without deleting ones missing from the template
    var deployment = new Deployment
    {
        Properties = new DeploymentProperties
        {
            Template = File.ReadAllText(@"path\to\template.json"),
            Parameters = File.ReadAllText(@"path\to\params.json"),
            Mode = DeploymentMode.Incremental
        }
    };

    resourceManagementClient.Deployments.CreateOrUpdate("your-resource-group", "your-deployment-name", deployment);

Real-life IaC Scenario

A startup's dev team wants to quickly replicate their production environment for testing. Using Terraform, they define their entire stack: servers, databases, load balancers, and network configurations. When a new member joins the team, they can provision an identical environment in their own sandbox account in minutes.

Challenges in IaC

State Management: Keeping track of the current state of infrastructure.

Drift: When changes are made outside of IaC tools.

Complexity: Large infrastructure setups can lead to long, complicated configuration files.
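Drift is easier to reason about with a small example. The sketch below compares the settings declared in code with the settings found on the live system. It is a toy model: FindDrift and the sample keys are hypothetical, and real tools such as Terraform do this comparison against their own state and the provider's API.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Compares the settings declared in code with what is actually deployed and
// reports every key that was changed, removed, or added outside the IaC tool.
static List<string> FindDrift(
    IReadOnlyDictionary<string, string> declared,
    IReadOnlyDictionary<string, string> actual)
{
    var drift = new List<string>();
    foreach (string key in declared.Keys.Union(actual.Keys).OrderBy(k => k, StringComparer.Ordinal))
    {
        bool inCode = declared.TryGetValue(key, out var want);
        bool live = actual.TryGetValue(key, out var have);
        if (inCode && !live) drift.Add($"{key}: missing (declared {want})");
        else if (!inCode && live) drift.Add($"{key}: unmanaged (found {have})");
        else if (want != have) drift.Add($"{key}: declared {want}, found {have}");
    }
    return drift;
}

// Hypothetical example: someone resized the instance and enabled a debug flag by hand.
var declaredSettings = new Dictionary<string, string> { ["instance_type"] = "t2.micro", ["port"] = "443" };
var liveSettings = new Dictionary<string, string> { ["instance_type"] = "t2.large", ["port"] = "443", ["debug"] = "on" };

foreach (string item in FindDrift(declaredSettings, liveSettings))
    Console.WriteLine(item);
// debug: unmanaged (found on)
// instance_type: declared t2.micro, found t2.large
```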

Monitoring & Feedback – The Heartbeat of a Healthy System

Why Monitor?

In the fast-paced world of DevOps, ensuring systems are running optimally is critical. Monitoring provides insights into the health of systems, ensuring high availability and performance.

Key Monitoring Tools

Prometheus: An open-source monitoring solution that provides a multi-dimensional data model.

Grafana: Open-source platform for monitoring and observability, often paired with Prometheus for visualizations.

ELK Stack: Elasticsearch, Logstash, and Kibana. This combination is great for logging and analyzing large volumes of data.

Example: Integrating with Azure Application Insights using C#

Azure Application Insights provides telemetry data from your applications, which helps you monitor performance, detect anomalies, and diagnose issues.

    using System;
    using Microsoft.ApplicationInsights;
    using Microsoft.ApplicationInsights.Extensibility;

    var configuration = new TelemetryConfiguration { InstrumentationKey = "your-instrumentation-key" };
    var telemetryClient = new TelemetryClient(configuration);

    // Track an event
    telemetryClient.TrackEvent("UserLoggedIn");

    // Track an exception
    try
    {
        // Some code...
    }
    catch (Exception ex)
    {
        telemetryClient.TrackException(ex);
    }

    // Send buffered telemetry before a short-lived process exits
    telemetryClient.Flush();

Real-life Monitoring Scenario

An online gaming platform uses Prometheus and Grafana. They monitor server loads, player counts, and transaction rates. During a new game launch, they observe a spike in server load and are quickly able to scale resources to maintain smooth gameplay.

Feedback Loops

It's not enough just to monitor. Dev teams should be alerted to anomalies. This feedback ensures quick resolutions and system reliability.

Challenges in Monitoring

Alert Fatigue: Too many non-critical alerts can lead teams to ignore the important ones.

Data Overload: With so many metrics, it can be challenging to identify which are crucial.

Infrastructure Costs: High-resolution monitoring can be resource-intensive.
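A common way to reduce alert fatigue is to alert only on sustained problems instead of every brief spike. The sketch below shows the idea with a hypothetical AlertIndexes helper and made-up CPU readings; monitoring systems typically express the same rule as a duration on the alert condition.

```csharp
using System;
using System.Collections.Generic;

// Returns the sample indexes at which an alert fires: only when the metric has stayed
// above the threshold for `required` consecutive samples, and once per sustained breach.
static List<int> AlertIndexes(IReadOnlyList<double> samples, double threshold, int required)
{
    var alerts = new List<int>();
    int streak = 0;
    for (int i = 0; i < samples.Count; i++)
    {
        streak = samples[i] > threshold ? streak + 1 : 0;
        if (streak == required) alerts.Add(i);
    }
    return alerts;
}

// Hypothetical CPU readings (percent): one brief spike, then a sustained overload.
double[] cpu = { 40, 95, 42, 91, 93, 96, 97, 50 };
Console.WriteLine(string.Join(", ", AlertIndexes(cpu, 90, 3)));  // 5
```

The single spike at index 1 is ignored, and the sustained overload produces one alert rather than one per sample.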

Configuration Management

Ensuring that all operational environments across an organization are configured in a consistent and expected manner is called Configuration Management.

Configuring an App with .NET Core Configuration API

In .NET Core, the Configuration API provides a way of fetching configuration settings from multiple sources, such as JSON files, XML files, environment variables, and command-line arguments. By using this API, developers can centralize and simplify how they access configuration data.

Example:

The following C# code fetches a connection string from an appsettings.json file:

    using System;
    using System.IO;
    using Microsoft.Extensions.Configuration;

    // Build configuration from appsettings.json in the current directory
    var builder = new ConfigurationBuilder()
        .SetBasePath(Directory.GetCurrentDirectory())
        .AddJsonFile("appsettings.json");

    IConfiguration config = builder.Build();

    // Nested keys are addressed with a colon separator
    Console.WriteLine(config["ConnectionStrings:DefaultConnection"]);
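When several sources are combined, a source added later overrides an earlier one for the same key, and keys are matched case-insensitively. The sketch below models that precedence with plain dictionaries so it runs without any NuGet packages. The Merge helper and the sample values are hypothetical and are not part of the Configuration API.

```csharp
using System;
using System.Collections.Generic;

// Merges configuration layers in order; a later layer overrides an earlier one,
// mirroring how providers added later to a ConfigurationBuilder take precedence.
static Dictionary<string, string> Merge(params IReadOnlyDictionary<string, string>[] layers)
{
    var merged = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
    foreach (var layer in layers)
        foreach (var pair in layer)
            merged[pair.Key] = pair.Value;
    return merged;
}

// Hypothetical values: defaults from a JSON file, overridden by an environment variable.
var fromJson = new Dictionary<string, string>
{
    ["ConnectionStrings:DefaultConnection"] = "Server=localhost;Database=dev",
    ["Logging:Level"] = "Information",
};
var fromEnvironment = new Dictionary<string, string>
{
    ["ConnectionStrings:DefaultConnection"] = "Server=prod-db;Database=app",
};

var settings = Merge(fromJson, fromEnvironment);
Console.WriteLine(settings["ConnectionStrings:DefaultConnection"]);  // Server=prod-db;Database=app
Console.WriteLine(settings["logging:level"]);                        // Information
```

This layering is what lets the same build run in every environment: the file holds defaults and each environment supplies only its overrides.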

Containerization with Docker

Containerization involves packaging an application and its dependencies into a "container". This ensures that the application works uniformly across different environments.

Building and Running a Docker Container in C# using Docker.DotNet

Docker.DotNet allows developers to interact with Docker, providing a method to programmatically manage containers.

Example:

In this C# code, we list available images, create a new container, and then start it using Docker.DotNet:

    using System;
    using System.Collections.Generic;
    using Docker.DotNet;
    using Docker.DotNet.Models;

    // Connect to the local Docker daemon over its Unix socket
    var configuration = new DockerClientConfiguration(new Uri("unix:///var/run/docker.sock"));
    using var client = configuration.CreateClient();

    // List images
    var images = await client.Images.ListImagesAsync(new ImagesListParameters() { MatchName = "my-image" });

    // Create a container
    var containerConfig = new CreateContainerParameters
    {
        Image = "my-image:latest",
        AttachStdin = false,
        AttachStdout = true,
        AttachStderr = true,
        Tty = true,
        Cmd = new List<string> { "echo", "hello world" },
        OpenStdin = true,
        StdinOnce = true
    };

    var response = await client.Containers.CreateContainerAsync(containerConfig);

    // Start the container
    await client.Containers.StartContainerAsync(response.ID, new ContainerStartParameters());

Security in the DevOps Pipeline

Incorporating security measures at each phase of the DevOps pipeline is critical. This concept is often referred to as "DevSecOps", integrating security practices within the DevOps process.

Securing a .NET Core App with IdentityServer

IdentityServer is an OpenID Connect and OAuth 2.0 framework for ASP.NET Core, which aids in authenticating and authorizing application users.

Example:

The following code integrates IdentityServer into a .NET Core application to handle client authentication:

    using System.Collections.Generic;
    using IdentityServer4.Models;
    using Microsoft.AspNetCore.Builder;
    using Microsoft.Extensions.DependencyInjection;

    public class Startup
    {
        public void ConfigureServices(IServiceCollection services)
        {
            services.AddIdentityServer()
                .AddDeveloperSigningCredential() // development only; use a real key in production
                // IdentityServer4 v4 requires every scope a client may request to be registered
                .AddInMemoryApiScopes(new List<ApiScope> { new ApiScope("api1") })
                .AddInMemoryClients(new List<Client>
                {
                    new Client
                    {
                        ClientId = "your-client-id",
                        AllowedGrantTypes = GrantTypes.ClientCredentials,
                        ClientSecrets = { new Secret("your-secret".Sha256()) },
                        AllowedScopes = { "api1" }
                    }
                });
        }

        public void Configure(IApplicationBuilder app)
        {
            app.UseIdentityServer();
        }
    }

Artifact Storage and Management

In DevOps, the output of the build phase (binaries, packages, container images) is stored as artifacts. Managing them properly means every later stage deploys exactly what was built and tested.

Using Azure Artifacts with Azure DevOps Client Libraries

Azure Artifacts allows teams to share packages, integrating directly into CI/CD pipelines. With Azure Artifacts, one can host and share NuGet, npm, and Maven packages with teams.

Example:

The following C# code lists all the feeds available in Azure Artifacts:

    using System;
    using Microsoft.VisualStudio.Services.Common;
    using Microsoft.VisualStudio.Services.Feed.WebApi;
    using Microsoft.VisualStudio.Services.WebApi;

    // Authenticate with a personal access token (the user name is left empty)
    var connection = new VssConnection(
        new Uri("https://dev.azure.com/your-org/"),
        new VssBasicCredential(string.Empty, "your-personal-access-token"));

    var feedClient = connection.GetClient<FeedHttpClient>();
    var feeds = await feedClient.GetFeedsAsync();

    foreach (var feed in feeds)
    {
        Console.WriteLine($"Feed Name: {feed.Name}");
    }

Rollbacks in Deployments

Rollbacks are crucial in case a deployment introduces unexpected issues. They allow systems to revert to a previous, stable state.

Automating Database Rollbacks with Entity Framework Migrations

Entity Framework allows for easy database rollbacks using migrations. By specifying a migration name, the database can be reverted to that particular state.

Example:

The following C# code demonstrates how to rollback a database to a previous migration:

    using Microsoft.EntityFrameworkCore;
    using Microsoft.EntityFrameworkCore.Design;
    using Microsoft.EntityFrameworkCore.Infrastructure;
    using Microsoft.EntityFrameworkCore.Migrations;

    // Rollback: migrating to an earlier migration reverts every migration applied after it
    using var dbContext = new MyDbContextFactory().CreateDbContext(new string[] { });
    var migrator = dbContext.Database.GetService<IMigrator>();
    migrator.Migrate("PreviousMigrationName");

    // MyDbContext is your application's DbContext class
    public class MyDbContextFactory : IDesignTimeDbContextFactory<MyDbContext>
    {
        public MyDbContext CreateDbContext(string[] args)
        {
            var builder = new DbContextOptionsBuilder<MyDbContext>();
            builder.UseSqlServer("YourConnectionStringHere");
            return new MyDbContext(builder.Options);
        }
    }

Containerization with Kubernetes

Kubernetes (often abbreviated as K8s) is an open-source container orchestration system that automates the deployment, scaling, and management of containerized applications. Unlike Docker, which focuses on single containers, Kubernetes manages clusters of containers, ensuring high availability, load balancing, and scalability.

Setting Up a Kubernetes Deployment

Deployments in Kubernetes allow you to describe the desired state for your applications and can automatically change the actual state to the desired state. A deployment defines a replica set, which in turn defines the pods, ensuring that a specified number of them are maintained.

C# Example Using KubernetesClient:

KubernetesClient is a .NET client library for Kubernetes. In this example, we create a new deployment using this library:

    using System;
    using System.Collections.Generic;
    using k8s;
    using k8s.Models;

    // Load cluster credentials from the local kubeconfig file
    var config = KubernetesClientConfiguration.BuildConfigFromConfigFile();
    IKubernetes client = new Kubernetes(config);

    var deployment = new V1Deployment
    {
        Metadata = new V1ObjectMeta
        {
            Name = "my-deployment"
        },
        Spec = new V1DeploymentSpec
        {
            Replicas = 2,
            // The selector must match the labels on the pod template below
            Selector = new V1LabelSelector
            {
                MatchLabels = new Dictionary<string, string>
                {
                    { "app", "my-app" }
                }
            },
            Template = new V1PodTemplateSpec
            {
                Metadata = new V1ObjectMeta
                {
                    Labels = new Dictionary<string, string>
                    {
                        { "app", "my-app" }
                    }
                },
                Spec = new V1PodSpec
                {
                    Containers = new List<V1Container>
                    {
                        new V1Container
                        {
                            Name = "my-app-container",
                            Image = "my-app:latest"
                        }
                    }
                }
            }
        }
    };

    // In KubernetesClient 7.0 and later, call client.AppsV1.CreateNamespacedDeployment instead
    var result = client.CreateNamespacedDeployment(deployment, "default");
    Console.WriteLine($"Deployment created: {result.Metadata.Name}");

Scaling Applications with Kubernetes

One of the strengths of Kubernetes is its ability to handle application scaling seamlessly. Using the deployment created above, you can easily scale the number of replicas (pods) up or down.

C# Example to Scale a Deployment:

    var deployment = client.ReadNamespacedDeployment("my-deployment", "default");
    deployment.Spec.Replicas = 5; // Scale to 5 replicas

    var updatedDeployment = client.ReplaceNamespacedDeployment(deployment, "my-deployment", "default");
    Console.WriteLine($"Deployment scaled: {updatedDeployment.Spec.Replicas} replicas");

Self-healing and Rollbacks with Kubernetes

Kubernetes constantly checks the health of nodes and pods. If a pod fails, Kubernetes recreates it, ensuring that the actual state matches the desired state. This provides a self-healing mechanism. Moreover, if a new deployment causes issues, Kubernetes allows for easy rollbacks to previous stable versions.

C# Example to Rollback a Deployment:

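    // Requires: using System.Linq;
    // The apps/v1 API has no rollback endpoint, so this mirrors what
    // "kubectl rollout undo --to-revision=2" does: copy the pod template of the
    // ReplicaSet recorded for that revision back onto the Deployment.
    var deployment = client.ReadNamespacedDeployment("my-deployment", "default");
    var replicaSets = client.ListNamespacedReplicaSet("default", labelSelector: "app=my-app");

    var target = replicaSets.Items.First(rs =>
        rs.Metadata.Annotations != null &&
        rs.Metadata.Annotations.TryGetValue("deployment.kubernetes.io/revision", out var revision) &&
        revision == "2"); // specify the revision number to roll back to

    var template = target.Spec.Template;
    template.Metadata.Labels.Remove("pod-template-hash"); // label added by the Deployment controller
    deployment.Spec.Template = template;

    client.ReplaceNamespacedDeployment(deployment, "my-deployment", "default");
    Console.WriteLine("Deployment rolled back to version 2");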

Incorporating Kubernetes into the DevOps pipeline offers a robust container orchestration system that ensures applications are available, scalable, and self-healing. It complements tools like Docker, allowing for management at scale across clusters of machines.
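The desired-state model behind Deployments and self-healing can be sketched as a single reconciliation pass. This is a simplified illustration, not the actual controller logic: the Reconcile helper, pod names, and action strings are all hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// One reconciliation pass: compare the desired replica count with the pods that are
// actually healthy and return the actions needed to close the gap.
static List<string> Reconcile(int desiredReplicas, IReadOnlyList<(string Name, bool Healthy)> pods)
{
    var actions = new List<string>();

    foreach (var pod in pods.Where(p => !p.Healthy))
        actions.Add($"delete {pod.Name}");   // failed pods are replaced, not repaired

    int healthy = pods.Count(p => p.Healthy);
    for (int i = healthy; i < desiredReplicas; i++)
        actions.Add("create pod");           // scale up to the desired count

    foreach (var pod in pods.Where(p => p.Healthy).Skip(desiredReplicas))
        actions.Add($"delete {pod.Name}");   // scale down surplus pods

    return actions;
}

// Hypothetical cluster state: two pods exist, one has failed, and three are desired.
var observed = new List<(string Name, bool Healthy)> { ("web-1", true), ("web-2", false) };
Console.WriteLine(string.Join("; ", Reconcile(3, observed)));  // delete web-2; create pod; create pod
```

Running the same pass repeatedly is what makes the system converge: whatever changes in the cluster, the next pass computes the steps back to the declared state.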

Advanced Techniques in DevOps Pipeline

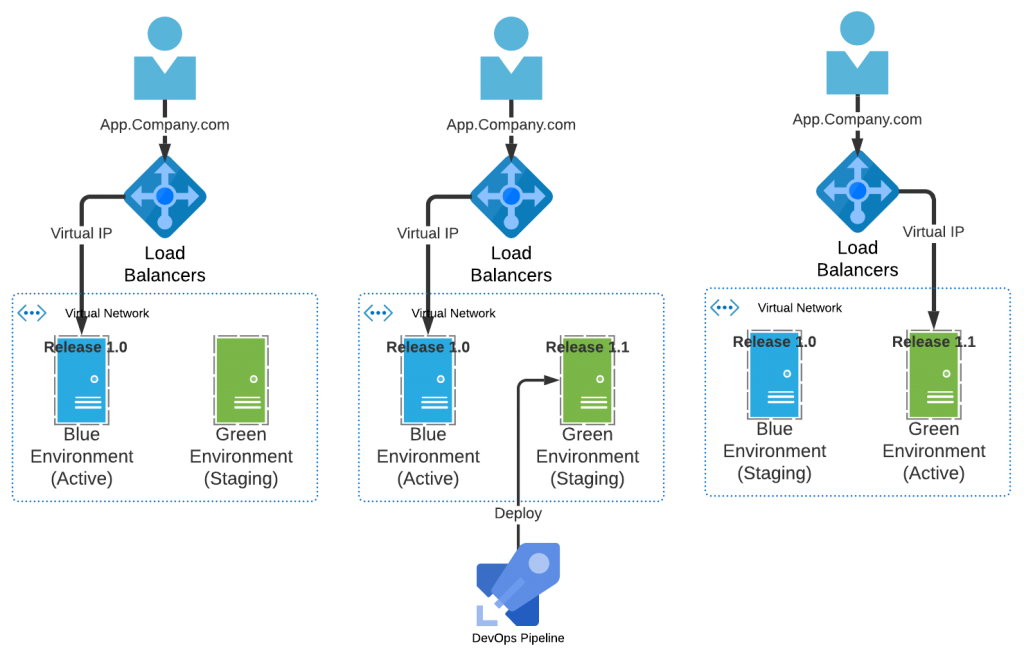

Blue-Green Deployments

Blue-Green deployments are a technique where two identical production environments are maintained: Blue (the currently live version) and Green (the next release). By keeping two environments, it becomes straightforward to switch between versions, allowing for immediate rollbacks and ensuring zero downtime during deployments.
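The core of the technique is that the router holds one pointer to the live environment, so a release and a rollback are the same cheap operation. Here is a minimal sketch of that idea. TrySwitch and the health-check callbacks are hypothetical and stand in for a real load balancer or slot swap.

```csharp
using System;

// The router points at exactly one environment; the other one is idle.
string live = "blue";
string idle = "green";

// Swaps the two environments, but only if the idle one passes its health check.
bool TrySwitch(Func<string, bool> isHealthy)
{
    if (!isHealthy(idle)) return false;  // keep serving from the current environment
    (live, idle) = (idle, live);
    return true;
}

Console.WriteLine(TrySwitch(env => false));  // False: Green failed its check, Blue stays live
Console.WriteLine(live);                     // blue
Console.WriteLine(TrySwitch(env => true));   // True: traffic now goes to Green
Console.WriteLine(live);                     // green
Console.WriteLine(TrySwitch(env => true));   // True: rolling back is the same operation
Console.WriteLine(live);                     // blue
```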

Real-Life Example Using Azure with C#:

Imagine an Azure web app named "MyApp" whose production slot serves the Blue version, while the Green version runs in a deployment slot named "green". Azure swaps slots within a single web app, so you can use the Azure Management Libraries for .NET to switch between the two environments:

    using System;
    using Microsoft.Azure.Management.Fluent;
    using Microsoft.Azure.Management.ResourceManager.Fluent;
    using Microsoft.Azure.Management.ResourceManager.Fluent.Core;

    var credentials = SdkContext.AzureCredentialsFactory.FromFile("path_to_auth_file");
    var azure = Azure.Configure()
        .WithLogLevel(HttpLoggingDelegatingHandler.Level.Basic)
        .Authenticate(credentials)
        .WithDefaultSubscription();

    // Production currently serves Blue; the "green" slot holds the newer version.
    var app = azure.WebApps.GetByResourceGroup("resourceGroupName", "MyApp");

    // Swap slots: Green becomes production and Blue moves to the "green" slot,
    // so running the same swap again rolls back.
    app.Swap("green");
    Console.WriteLine("Successfully switched to Green environment.");

Canary Releases

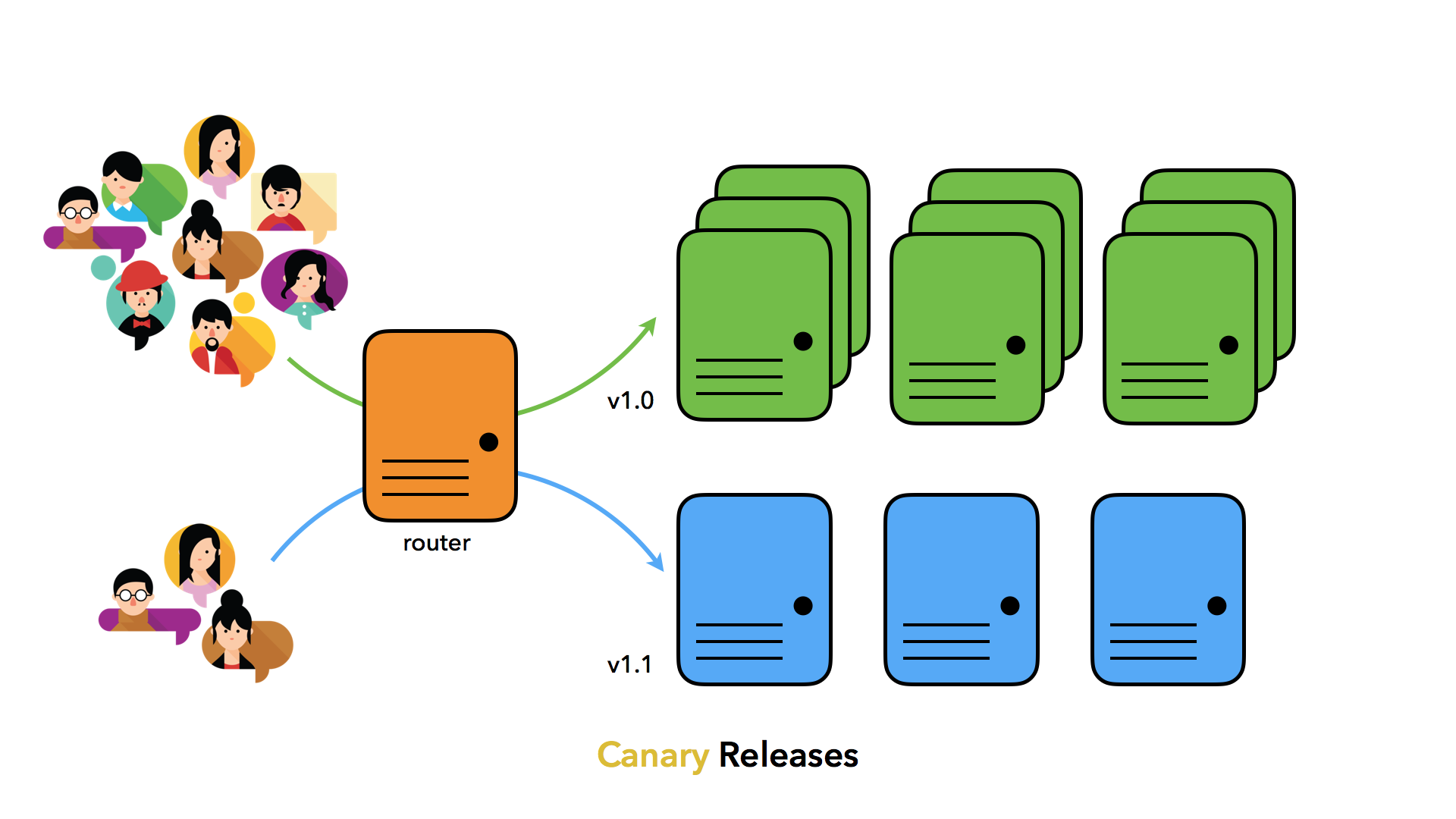

Canary releases involve rolling out a new feature or service version to a subset of users to evaluate its performance and any potential issues in a real-world environment.
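One simple way to pick the subset is to hash a stable identifier, so each user consistently sees the same version for the duration of the rollout. The sketch below assumes users are identified by a string ID. RoutesToCanary is a hypothetical helper, not part of any routing product.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

// Maps a user ID to a stable bucket in [0, 100) and sends the lowest buckets to the canary.
// Hashing (rather than random choice) keeps each user on the same version across requests.
static bool RoutesToCanary(string userId, int canaryPercent)
{
    byte[] hash = SHA256.HashData(Encoding.UTF8.GetBytes(userId));
    uint value = BitConverter.ToUInt32(hash, 0);
    return value % 100 < (uint)canaryPercent;
}

int canaryUsers = 0;
for (int i = 0; i < 10_000; i++)
    if (RoutesToCanary($"user-{i}", 10)) canaryUsers++;

Console.WriteLine(RoutesToCanary("user-42", 10) == RoutesToCanary("user-42", 10));  // True: assignment is stable
Console.WriteLine($"{canaryUsers} of 10000 users see the canary");                  // roughly 1000
```

Raising canaryPercent step by step widens the rollout, and users already in the canary stay in it because their bucket does not change.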

Real-Life Example Using Azure Traffic Manager with C#:

Azure Traffic Manager allows for directing user traffic to specific service endpoints based on different rules. This makes it suitable for canary releases:

    using System;
    using Microsoft.Azure.Management.Fluent;
    using Microsoft.Azure.Management.ResourceManager.Fluent;
    using Microsoft.Azure.Management.ResourceManager.Fluent.Core;
    using Microsoft.Azure.Management.TrafficManager.Fluent;

    var credentials = SdkContext.AzureCredentialsFactory.FromFile("path_to_auth_file");
    var azure = Azure.Configure()
        .WithLogLevel(HttpLoggingDelegatingHandler.Level.Basic)
        .Authenticate(credentials)
        .WithDefaultSubscription();

    var profile = azure.TrafficManagerProfiles.GetByResourceGroup("resourceGroupName", "trafficManagerProfileName");

    // Add the canary endpoint with weight 10. Weights are relative: the canary receives 10% of
    // the traffic only if the profile uses weighted routing and the other weights sum to 90.
    // Method names follow the Fluent SDK's endpoint definition; check them against your SDK version.
    profile.Update()
        .WithWeightBasedRouting()
        .DefineExternalTargetEndpoint("canaryEndpointName")
            .ToFqdn("canaryEndpointFqdn")
            .FromRegion(Region.USEast)
            .WithRoutingWeight(10)
            .WithRoutingPriority(1)
            .Attach()
        .Apply();

    Console.WriteLine("Traffic routing updated for Canary release.");

Feature Toggles

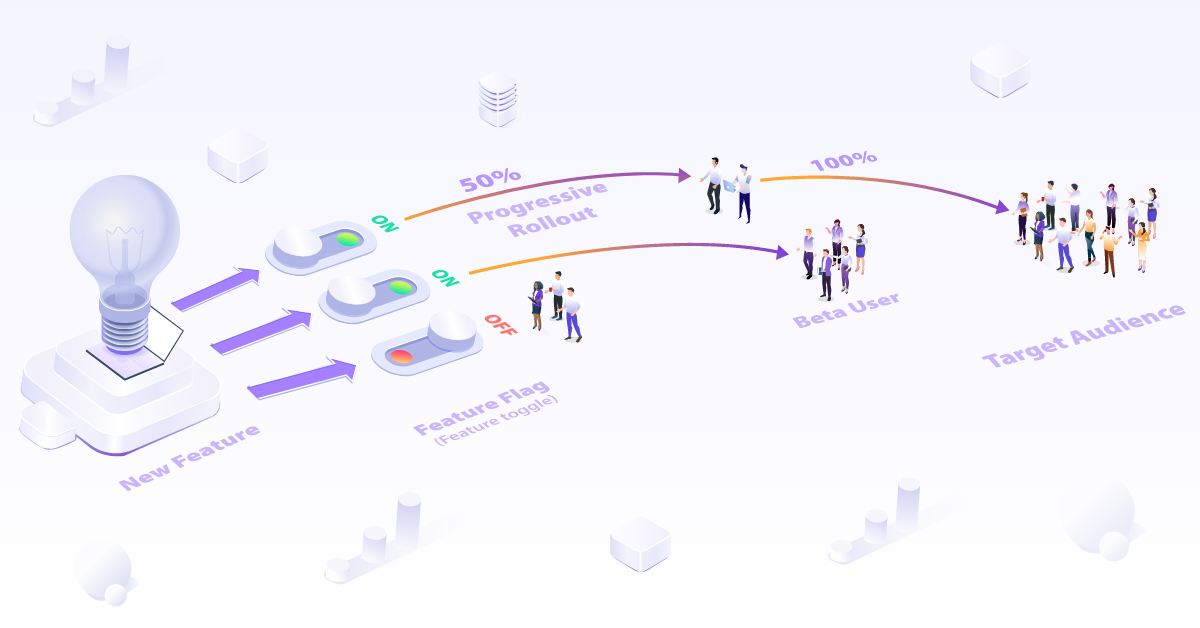

Feature toggles or feature flags allow features to be turned on or off at runtime without deploying new code. This is powerful for A/B testing, gradual rollouts, or even disabling buggy features.

Real-Life Example Using .NET Core's Configuration with C#:

.NET Core's configuration system can be utilized for feature toggles. Here's an example using appsettings.json:

    {
      "FeatureToggles": {
        "NewFeature": true
      }
    }

C# code to check the feature flag:

    using System;
    using Microsoft.Extensions.Configuration;

    // reloadOnChange lets the flag be flipped by editing the file, without a redeploy
    IConfiguration config = new ConfigurationBuilder()
        .AddJsonFile("appsettings.json", optional: true, reloadOnChange: true)
        .Build();

    bool isNewFeatureEnabled = config.GetValue<bool>("FeatureToggles:NewFeature");

    if (isNewFeatureEnabled)
    {
        // Code for the new feature
        Console.WriteLine("New Feature is ON");
    }
    else
    {
        // Old feature or alternative code
        Console.WriteLine("New Feature is OFF");
    }

These advanced techniques empower DevOps teams to manage deployments with more flexibility, thereby minimizing risks and maximizing system availability.

Conclusion

In this comprehensive exploration of DevOps pipelines, we've embarked on a journey through the heart of modern software development. DevOps is not just a set of practices; it's a mindset that prioritizes collaboration, automation, and continuous improvement. We've delved into the essential components that make up a DevOps pipeline, from source control and continuous integration to containerization and advanced deployment strategies.

As you've discovered, DevOps pipelines empower teams to deliver software faster, more reliably, and with a laser focus on quality. They enable us to catch defects early, provide immediate feedback, and ensure the seamless delivery of features to end-users. The automation and orchestration of processes are the driving forces behind this transformation.

However, it's essential to remember that DevOps is not a one-size-fits-all solution. Your pipeline may evolve and adapt to the unique needs of your organization and projects. Embrace a culture of learning and experimentation, and continuously seek opportunities for optimization.

In your DevOps journey, remember that it's not just about the tools and technologies but the people, processes, and collaboration that truly make a difference. DevOps is a collective effort, and by fostering a culture of teamwork, you can achieve remarkable results. So, whether you're just starting or looking to refine your DevOps practices, remember that the DevOps pipeline is your highway to innovation and efficiency.
