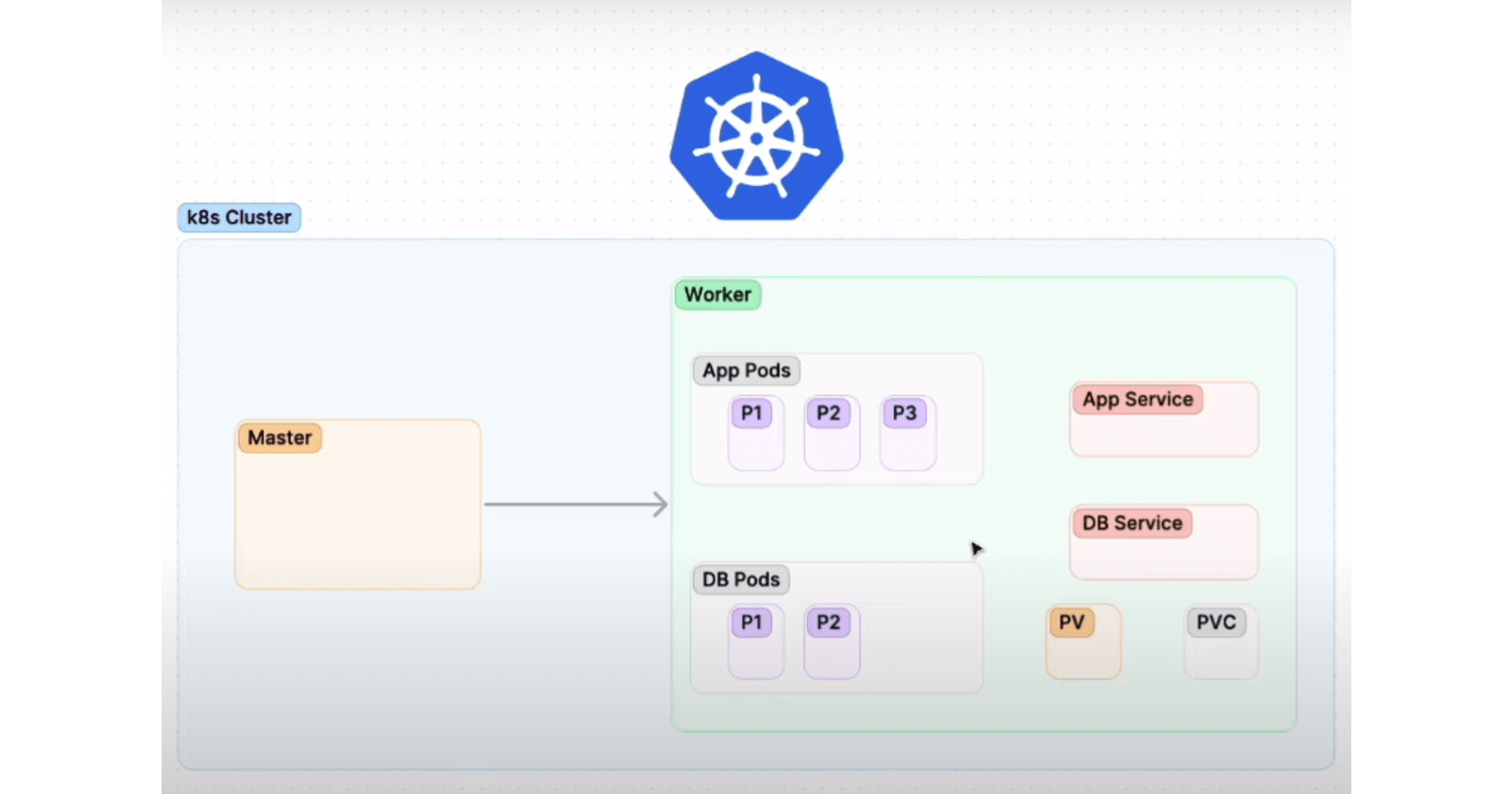

Kubernetes is a powerful container orchestration platform that allows you to deploy and manage complex applications at scale. In this tutorial, we'll walk through the process of setting up a Kubernetes cluster on AWS EC2, deploying a Flask API and a MongoDB instance on the cluster, and troubleshooting some common issues that you may encounter along the way.

Prerequisites

Before we get started, make sure you have the following tools and services installed and configured:

Two AWS EC2 instances of type t2.medium running Ubuntu 20.04 LTS (one for the master node and one for the worker node)

Basic knowledge of Kubernetes concepts and YAML syntax

Step 1: Setting up the Kubernetes cluster

The first step in deploying our Flask API and MongoDB on Kubernetes is to set up a Kubernetes cluster. I'll use the kubeadm tool to set up a two-node cluster with one master node and one worker node.

Step 1.1: Installing Kubernetes dependencies

First, we need to install some dependencies on both EC2 instances (master and worker):

sudo apt update -y

sudo apt install docker.io -y

sudo systemctl start docker

sudo systemctl enable docker

sudo curl -fsSLo /usr/share/keyrings/kubernetes-archive-keyring.gpg https://packages.cloud.google.com/apt/doc/apt-key.gpg

echo "deb [signed-by=/usr/share/keyrings/kubernetes-archive-keyring.gpg] https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

sudo apt update -y

sudo apt install kubeadm=1.20.0-00 kubectl=1.20.0-00 kubelet=1.20.0-00 -y
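Before moving on, it can help to confirm that the install actually put every tool on the PATH of both nodes. Here is a minimal sketch of such a check; the helper name missing_tools is my own and not part of any Kubernetes tooling.

```shell
# Print the name of every tool in the argument list that is not on PATH.
# missing_tools is a hypothetical helper name used only for this sketch.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Usage on each node (tool names taken from the install commands above):
#   missing_tools docker kubeadm kubectl kubelet
```

If the function prints nothing, all four tools were found.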

Step 1.2: Initializing the cluster

Next, we need to initialize the Kubernetes cluster on the master node:

sudo su

kubeadm init

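This command will create a new Kubernetes control plane with the default configuration and networking settings. kubeadm init also accepts an optional --pod-network-cidr flag that specifies the IP address range used for pod networking in the cluster; it is not passed here, so pod addressing is left to the CNI plugin installed in Step 1.4.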

Step 1.3: Configuring kubectl

After initializing the cluster, we need to configure kubectl to connect to it:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

This will copy the cluster's admin configuration file into your home directory on the master node, give your user ownership of it, and so let kubectl use it to connect to the cluster.

Step 1.4: Installing Weave Net CNI plugin

Next, we deploy the Weave Net CNI plugin as a DaemonSet, so that a Weave Net pod runs on every node in the cluster and provides pod networking.

kubectl apply -f https://github.com/weaveworks/weave/releases/download/v2.8.1/weave-daemonset-k8s.yaml

Step 1.5: Generating token to join nodes

Then, on the master node, we generate a new bootstrap token and print the full command for joining new nodes to our Kubernetes cluster:

kubeadm token create --print-join-command

Step 1.6: Joining the worker node

Finally, we need to join the worker node to the cluster. On the worker node, first reset any previous kubeadm state:

sudo su

kubeadm reset

Then paste the join command that we got as output in Step 1.5 on the worker node and append `--v=5` to the end.

I run kubeadm reset first because it reverts any changes made by an earlier kubeadm init or kubeadm join, which leaves the node in a clean state before it joins as a worker. The `--v=5` flag raises the log verbosity, which makes a failed join easier to diagnose.

Congratulations! You now have a functioning Kubernetes cluster with one master and one worker node.
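To confirm that both nodes really joined and are healthy, you can look at the STATUS column of kubectl get nodes. The sketch below wraps that in a small helper (not_ready_nodes is a name I made up); it assumes the default column layout, where the second column is the status, and it will also list a node that is cordoned because its status then reads "Ready,SchedulingDisabled".

```shell
# Print the names of nodes whose STATUS column is anything other than "Ready".
# not_ready_nodes is a hypothetical helper name used only for this sketch.
not_ready_nodes() {
  kubectl get nodes --no-headers | awk '$2 != "Ready" { print $1 }'
}

# Usage on the master node:
#   not_ready_nodes    # prints nothing once every node reports Ready
```

A node can stay NotReady for a short while after joining, until the Weave Net pod on it has started.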

Step 2: Deploying the Flask API

Now that we have our Kubernetes cluster set up, we can deploy the Flask API on it. I'll use a Docker image that I've already created and pushed to Docker Hub.

Note: If you want to see the code and follow along with the steps, you can find everything I am doing in my GitHub repository: github.com/ambuzrnjn33/taskmaster-k8s.git

Step 2.1: Creating the deployment

To create a deployment for the Flask API, we need to define a YAML file that describes the deployment:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: taskmaster
  labels:
    app: taskmaster
spec:
  replicas: 1
  selector:
    matchLabels:
      app: taskmaster
  template:
    metadata:
      labels:
        app: taskmaster
    spec:
      containers:
        - name: taskmaster
          image: ambuzrnjn33/taskmaster:latest
          ports:
            - containerPort: 5000
          imagePullPolicy: Always

Save this file as taskmaster.yml and deploy it to the Kubernetes cluster with the following command:

kubectl apply -f taskmaster.yml

This will create a new deployment for the Flask API with one replica.

Step 2.2: Scaling the deployment

To scale the deployment to three replicas, run the following command:

kubectl scale --replicas=3 deployment/taskmaster

This will increase the number of replicas of the deployment to three.
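Scaling is not instant, so it is worth checking how many replicas are actually ready. The sketch below reads the readyReplicas field from the Deployment status; the helper name ready_replicas is my own. Kubernetes omits that field while no replica is ready, so the function falls back to 0 in that case.

```shell
# Print the number of ready replicas of a Deployment (0 if the field is absent).
# ready_replicas is a hypothetical helper name used only for this sketch.
ready_replicas() {
  count=$(kubectl get deployment "$1" -o jsonpath='{.status.readyReplicas}')
  printf '%s\n' "${count:-0}"
}

# Usage:
#   ready_replicas taskmaster
# Alternatively, block until the rollout finishes:
#   kubectl rollout status deployment/taskmaster
```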

Step 2.3: Creating the service

Next, we need to create a Kubernetes Service to expose the Flask API to the outside world:

apiVersion: v1
kind: Service
metadata:
  name: taskmaster-svc
spec:
  selector:
    app: taskmaster
  ports:
    - port: 80
      targetPort: 5000
      nodePort: 30007
  type: NodePort

Save this file as taskmaster-svc.yml and deploy it to the Kubernetes cluster with the following command:

kubectl apply -f taskmaster-svc.yml

This will create a new Kubernetes Service of type NodePort: port 30007 on every node forwards to the Service's port 80, which in turn forwards to port 5000 on the Flask API container.

Step 2.4: Accessing the API

Now that we have deployed the Flask API and created a Kubernetes Service for it, we can access it from outside the cluster using the IP address of the worker node and the NodePort we specified in the taskmaster-svc.yml file.

You can access the API at http://<ip-of-worker-node>:30007/.
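Three port numbers are involved here: the nodePort (30007) that is opened on the nodes, the Service port (80) used inside the cluster, and the targetPort (5000) the container listens on. If you prefer not to hard-code the NodePort, you can read it back from the Service. This is a small sketch with helper names of my own (service_node_port and api_url); it assumes the Service has a single port entry.

```shell
# Print the NodePort assigned to the first port of a Service.
# Both function names are hypothetical helpers for this sketch.
service_node_port() {
  kubectl get service "$1" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Build the external URL from a node IP ($1) and a Service name ($2).
api_url() {
  printf 'http://%s:%s/\n' "$1" "$(service_node_port "$2")"
}

# Usage:
#   curl "$(api_url <ip-of-worker-node> taskmaster-svc)"
```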

Step 3: Deploying MongoDB

Now that we have the Flask API up and running, we need to deploy a MongoDB instance to store the data for the API. I'll use a PersistentVolumeClaim to ensure that the data is persisted even if the MongoDB container is restarted.

Step 3.1: Creating the PersistentVolume

First, we need to create a PersistentVolume to store the data for MongoDB:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: mongo-pv
spec:
  capacity:
    storage: 256Mi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: /tmp/db

Save this file as mongo-pv.yml and deploy it to the Kubernetes cluster with the following command:

kubectl apply -f mongo-pv.yml

This will create a new PersistentVolume with 256Mi (256 MiB) of storage, backed by the /tmp/db directory on the node.

Step 3.2: Creating the PersistentVolumeClaim

Next, we need to create a PersistentVolumeClaim to request storage from the PersistentVolume we just created:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mongo-pvc
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 256Mi

Save this file as mongo-pvc.yml and deploy it to the Kubernetes cluster with the following command:

kubectl apply -f mongo-pvc.yml

This will create a new PersistentVolumeClaim that requests 256Mi (256 MiB) of storage.
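Before deploying MongoDB, it is worth confirming that the claim has bound to the volume. A claim reports its state in the status.phase field, which reads Bound once a matching PersistentVolume has been found. The helper names below (pvc_phase and pvc_is_bound) are my own, so treat this as a sketch.

```shell
# Print the phase of a PersistentVolumeClaim (for example Pending or Bound).
# Both function names are hypothetical helpers for this sketch.
pvc_phase() {
  kubectl get pvc "$1" -o jsonpath='{.status.phase}'
}

# Succeed only if the claim is bound to a PersistentVolume.
pvc_is_bound() {
  [ "$(pvc_phase "$1")" = "Bound" ]
}

# Usage:
#   pvc_is_bound mongo-pvc && echo "mongo-pvc is bound"
```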

Step 3.3: Creating the Mongo Deployment

Next, we can create the Deployment for MongoDB:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mongo
  labels:
    app: mongo
spec:
  selector:
    matchLabels:
      app: mongo
  template:
    metadata:
      labels:
        app: mongo
    spec:
      containers:
        - name: mongo
          image: mongo
          ports:
            - containerPort: 27017
          volumeMounts:
            - name: storage
              mountPath: /data/db
      volumes:
        - name: storage
          persistentVolumeClaim:
            claimName: mongo-pvc

Save this file as mongo.yml and deploy it to the Kubernetes cluster with the following command:

kubectl apply -f mongo.yml

This will create a new Deployment with one replica that runs the official MongoDB container and mounts the persistent volume claim we created earlier.

Step 3.4: Creating the MongoDB Service

Finally, we need to create a Service to expose the MongoDB instance to the Flask API:

apiVersion: v1
kind: Service
metadata:
  labels:
    app: mongo
  name: mongo
spec:
  ports:
    - port: 27017
      targetPort: 27017
  selector:
    app: mongo

Save this file as mongo-svc.yml and deploy it to the Kubernetes cluster with the following command:

kubectl apply -f mongo-svc.yml

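This will create a new Service of the default type, ClusterIP, that forwards port 27017 to the MongoDB container. Inside the cluster, the Flask API can reach MongoDB at the DNS name mongo on port 27017.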
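The Service name is what the Flask API uses as the MongoDB host name. As a rough sketch of how that name expands, the helper below (mongo_uri, a name I made up) builds the fully qualified connection string. It assumes the default namespace and the cluster domain cluster.local, which is the kubeadm default; your cluster may differ. From a Pod in the same namespace, the short form mongodb://mongo:27017/ resolves to the same Service.

```shell
# Build a MongoDB URI from a Service name, a namespace, and a port.
# mongo_uri is a hypothetical helper; "default" and "cluster.local" are assumptions.
mongo_uri() {
  service=$1
  namespace=${2:-default}
  port=${3:-27017}
  printf 'mongodb://%s.%s.svc.cluster.local:%s/\n' "$service" "$namespace" "$port"
}

# Usage:
#   mongo_uri mongo
```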

Step 4: Test the Flask API

With the MongoDB database and Flask API deployed, we can now test the API to ensure that everything is working as expected. To do this, I'll use the curl command to make HTTP requests to the API.

Create a task

To create a task in the API, run the following command:

curl -H "Content-Type: application/json" -X POST -d '{"title": "Do laundry", "description": "Wash and fold clothes"}' http://<server-ip>:30007/tasks

Replace <server-ip> with the IP address of the node that the Flask API Service is running on. If the task is created successfully, you should see a JSON response containing the task details:

{"_id": {"$oid": "60924af78432c1d826ef23ec"}, "title": "Do laundry", "description": "Wash and fold clothes"}
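The requests that follow need the ID of the task, which is the value of $oid in this response. If you would rather not copy it by hand, a small sed filter can pull it out. This is only a sketch: task_id is my own helper name, and it assumes the response is a single task object shaped like the one above.

```shell
# Read a task JSON object on stdin and print the value of its "$oid" field.
# task_id is a hypothetical helper name used only for this sketch.
task_id() {
  sed -n 's/.*"\$oid": *"\([0-9a-f]*\)".*/\1/p'
}

# Usage (same request as above, with the response piped into the filter):
#   TASK_ID=$(curl -s -H "Content-Type: application/json" -X POST \
#     -d '{"title": "Do laundry", "description": "Wash and fold clothes"}' \
#     http://<server-ip>:30007/tasks | task_id)
```

You can then use "$TASK_ID" wherever the commands below show <task-id>.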

Get all tasks

To get all tasks from the API, run the following command:

curl http://<server-ip>:30007/tasks

Replace <server-ip> with the IP address of the node that the Flask API Service is running on. If everything is working correctly, you should see a JSON response containing the task you just created:

[{"_id": {"$oid": "60924af78432c1d826ef23ec"}, "title": "Do laundry", "description": "Wash and fold clothes"}]

Get a specific task

To get a specific task by ID, run the following command:

curl http://<server-ip>:30007/tasks/<task-id>

Replace <server-ip> with the IP address of the node that the Flask API Service is running on, and <task-id> with the ID of the task you want to retrieve. If everything is working correctly, you should see a JSON response containing the details of the specified task:

{"_id": {"$oid": "60924af78432c1d826ef23ec"}, "title": "Do laundry", "description": "Wash and fold clothes"}

Update a task

To update a task by ID, run the following command:

curl -H "Content-Type: application/json" -X PUT -d '{"title": "Do laundry", "description": "Wash and fold clothes and towels"}' http://<server-ip>:30007/tasks/<task-id>

Replace <server-ip> with the IP address of the node that the Flask API Service is running on, and <task-id> with the ID of the task you want to update. If the task is updated successfully, you should see a JSON response containing the updated task details:

{"_id": {"$oid": "60924af78432c1d826ef23ec"}, "title": "Do laundry", "description": "Wash and fold clothes and towels"}

Delete a task

To delete a task by ID, run the following command:

curl -X DELETE http://<server-ip>:30007/tasks/<task-id>

Replace <server-ip> with the IP address of the node that the Flask API Service is running on, and <task-id> with the ID of the task you want to delete. If the task is deleted successfully, you should see a JSON response containing the ID of the deleted task:

{"_id": {"$oid": "60924af78432c1d826ef23ec"}}

Troubleshooting

During the deployment process, you may encounter some errors. Here are a few common errors and how to troubleshoot them:

Error: "The connection to the server localhost:8080 was refused"

This error usually means that kubectl cannot find a kubeconfig file, so it falls back to the default address localhost:8080. It can also occur when the Kubernetes API server is not running or is not accessible from the machine you are deploying from. The API server is responsible for managing the Kubernetes cluster and exposing the Kubernetes API to client applications. To troubleshoot this error, first make sure that you completed Step 1.3 as the user who runs kubectl, so that $HOME/.kube/config exists. You can then run the following command to check whether the API server is reachable:

kubectl cluster-info

In a kubeadm cluster, the API server runs as a static Pod managed by the kubelet rather than as its own systemd service. If it is not running, restart the kubelet on the master node:

sudo systemctl restart kubelet
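To see which API server address kubectl is actually configured to use, you can read it from the kubeconfig file. The sketch below uses two helper names of my own (kubeconfig_file and api_server_url). It assumes KUBECONFIG, if set, holds a single path rather than a colon-separated list, and it only reports the first server entry. If no kubeconfig is found at all, that is the typical reason kubectl ends up trying localhost:8080.

```shell
# Print the kubeconfig file kubectl reads by default.
# Both function names are hypothetical helpers for this sketch.
kubeconfig_file() {
  printf '%s\n' "${KUBECONFIG:-$HOME/.kube/config}"
}

# Print the first API server URL in that file, or fail if it is unreadable.
api_server_url() {
  file=$(kubeconfig_file)
  [ -r "$file" ] || { echo "no readable kubeconfig at $file" >&2; return 1; }
  awk '$1 == "server:" { print $2; exit }' "$file"
}

# Usage:
#   api_server_url
```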

Error: "pod has unbound immediate PersistentVolumeClaims"

This error occurs when a Pod references a PersistentVolumeClaim that has not been bound to a PersistentVolume. Kubernetes uses PersistentVolumes to provide persistent storage for containers in a cluster. A Pod requests storage through a PersistentVolumeClaim, which specifies the size and access mode of the storage it needs. To troubleshoot this error, make sure that you have created a PersistentVolume and a PersistentVolumeClaim whose capacity and access modes match. You can check the status of your PersistentVolumeClaims by running the following command:

kubectl get pvc

If a PersistentVolumeClaim is not bound to a PersistentVolume, you may need to create a new PersistentVolume that matches the requirements of the PersistentVolumeClaim.
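When there are several claims, a quick filter over the same command shows only the ones that still need attention. This sketch assumes the default column layout of kubectl get pvc, where the second column is the status; unbound_pvcs is a helper name of my own.

```shell
# Print the names of PersistentVolumeClaims whose STATUS is not "Bound".
# unbound_pvcs is a hypothetical helper name used only for this sketch.
unbound_pvcs() {
  kubectl get pvc --no-headers | awk '$2 != "Bound" { print $1 }'
}

# Usage:
#   unbound_pvcs
# For the reason a claim is still pending, look at its events:
#   kubectl describe pvc mongo-pvc
```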

Error: "ServerSelectionTimeoutError: mongo:27017"

One common DNS issue that can occur in Kubernetes is when Pods are unable to resolve the IP addresses of other Pods or services in the cluster. This can lead to errors like pymongo.errors.ServerSelectionTimeoutError when trying to connect to a MongoDB instance.

This error occurs when the Flask API is unable to connect to the MongoDB instance. MongoDB is a document-oriented database that stores data in flexible JSON-like documents. To troubleshoot this error:

Check that the affected Pods are running and are healthy. You can use the `kubectl get pods` command to see if the Pods are running. You can also check the logs for the affected containers using `kubectl logs` to see if there are any issues.

Check that the affected Pods are able to communicate with each other. You can use the `kubectl exec` command to shell into the affected containers and try to ping the IP addresses of other Pods in the cluster. If the ping is successful, it means that the Pods are able to communicate with each other.

Check the DNS service in your Kubernetes cluster. Kubernetes uses a DNS service to resolve domain names to IP addresses within the cluster. If there is an issue with the DNS service, Pods and containers can be unable to resolve the names of other Pods and Services in the cluster. You can use the `kubectl logs` command to view the logs for the DNS Pods and see if there are any errors or issues.

Restart the DNS service. If you've identified an issue with the DNS service, you can try restarting it by deleting the DNS Pods using the `kubectl delete pods -n kube-system -l k8s-app=kube-dns` command. The Pods are recreated automatically, which restarts the DNS service and may resolve the issue.
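A direct way to test name resolution is to resolve the Service name from inside one of the API Pods. The sketch below picks the first Pod matching a label and runs getent hosts in it. The helper names (first_pod and resolve_from_pod) are my own, and it assumes the container image ships the getent utility, which not every image does.

```shell
# Print the name of the first Pod that matches a label selector.
# Both function names are hypothetical helpers for this sketch.
first_pod() {
  kubectl get pods -l "$1" -o jsonpath='{.items[0].metadata.name}'
}

# Resolve a host name ($2) from inside the first Pod matching a label ($1).
# Assumes the container image provides getent.
resolve_from_pod() {
  pod=$(first_pod "$1") || return 1
  [ -n "$pod" ] || return 1
  kubectl exec "$pod" -- getent hosts "$2"
}

# Usage:
#   resolve_from_pod app=taskmaster mongo
```

If the lookup returns nothing while the mongo Service exists, the cluster DNS Pods are the next place to look.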

Conclusion

In this blog post, I have demonstrated how to deploy a Flask API and a MongoDB instance to a Kubernetes cluster. I have shown how to create a Deployment, scale it, and expose it to the outside world using a Service. I have also shown how to deploy a MongoDB instance using a PersistentVolumeClaim, and how to connect it to the Flask API using a Service. Finally, I have discussed some common errors and how to troubleshoot them.

Kubernetes provides a powerful platform for deploying and managing containerized applications at scale. By following the steps outlined in this blog post, you can easily deploy a Flask API and a MongoDB instance to a Kubernetes cluster and start building your own microservices architecture.

Thank you for reading this blog. I hope you learned something new today! If you found it helpful, please like, share, and follow me for more blog posts like this in the future.

If you have any suggestions, I am happy to learn from you.


See you in the next blog. Till then, stay safe and stay healthy!
