Kubernetes Architecture and Components: A Detailed Guide to Installation, Configuration, and Management

What is Kubernetes?

Kubernetes, also known as "K8s", is a powerful open-source container orchestration platform that enables the deployment, scaling, and management of containerized applications. Containerization has revolutionized the way we develop, package, and deploy software applications, and Kubernetes is at the forefront of this movement.

With Kubernetes, you can automate the deployment of your applications, scale them up or down based on demand, and manage them with ease. It provides a robust platform to manage containerized workloads and services, abstracting the underlying infrastructure and allowing developers to focus on their applications rather than the infrastructure.

One of the key features of Kubernetes is its ability to manage containerized applications across many hosts in a cluster, providing a unified view of your entire application stack. It also offers built-in load balancing, self-healing, rolling updates, and service discovery, which make it well suited to building highly available and resilient applications.

Kubernetes also provides a rich ecosystem of tools and extensions, such as Helm, Istio, and Prometheus, which can be used to extend and enhance its capabilities. These tools can help with tasks such as managing application dependencies, monitoring and tracing, and security.

Kubernetes is built on a set of abstractions that allow developers to define their applications and services in a declarative way. Some of these abstractions include:

Pods: The smallest deployable unit in Kubernetes. A pod is a logical host for one or more containers. The containers in a pod share a network namespace (one IP address and port space) and can share storage volumes, while staying isolated from other pods.

Nodes: Nodes are the machines, physical or virtual, in a Kubernetes cluster that run the containers. Each node runs a container runtime, such as containerd or CRI-O, and a kubelet agent. The kubelet communicates with the control plane (historically called the master) to receive instructions on which pods to run and reports the status of the containers and their resources back to it. Nodes can also be labeled with metadata, which can be used to schedule specific workloads onto specific nodes based on their requirements.

Services: An abstraction that exposes a set of pods, selected by labels, as a single network service. A Service hides the individual, changing IP addresses and ports of the pods behind a stable virtual IP address and DNS name that other services can connect to.

ReplicaSets: A way to ensure that a specified number of replicas (i.e., identical copies) of a pod are running at any given time. Changing the replica count scales the workload up or down as demand changes.

Deployments: A higher-level abstraction that manages ReplicaSets and provides features for rolling updates and rollbacks. Deployments provide a way to manage the entire lifecycle of an application or service.
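To make these abstractions concrete, here is a minimal sketch of a Deployment and a Service written declaratively. The name `web`, the label `app: web`, the `nginx:1.25` image and the replica count are illustrative assumptions. The script only writes the manifest to `web.yaml`; applying it requires a running cluster.

```bash
#!/usr/bin/env bash
# Write a minimal Deployment + Service manifest (names, image and replica count are illustrative).
cat > web.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3              # the Deployment's ReplicaSet keeps 3 pods running
  selector:
    matchLabels:
      app: web
  template:                # pod template used for every replica
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.25
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  selector:
    app: web               # the Service targets pods carrying this label
  ports:
    - port: 80
      targetPort: 80
EOF
echo "wrote web.yaml"
```

Running `kubectl apply -f web.yaml` against a cluster would create the Deployment, which in turn creates a ReplicaSet that keeps three pods running, plus a Service that gives those pods one stable address.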

Why this blog post?

In this blog post, we will dive deeper into the Kubernetes architecture and components, installation and configuration of Kubernetes, and the Kubernetes API server, etcd, kube-scheduler, kube-controller-manager, and kubelet. By understanding these components, you can better manage your Kubernetes cluster and ensure that your applications are running smoothly.

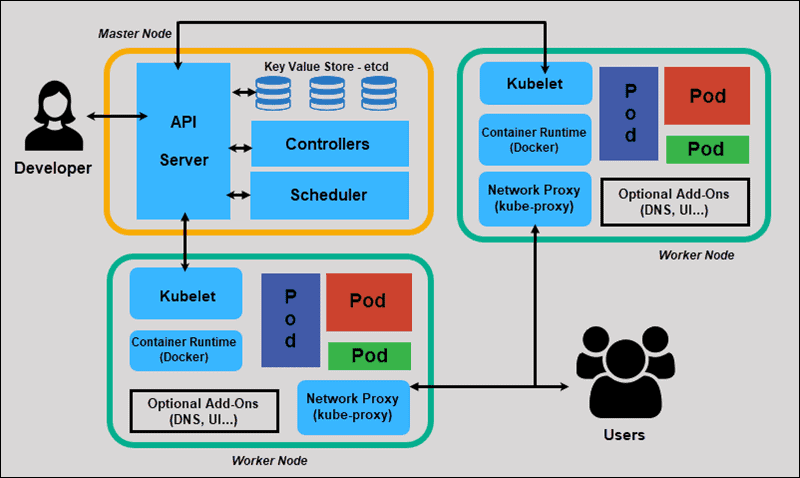

Kubernetes Architecture and Components

The Kubernetes architecture is composed of a control plane, which runs on one or more master nodes, and worker nodes. The master node manages the Kubernetes cluster, while the worker nodes run the containers and applications.

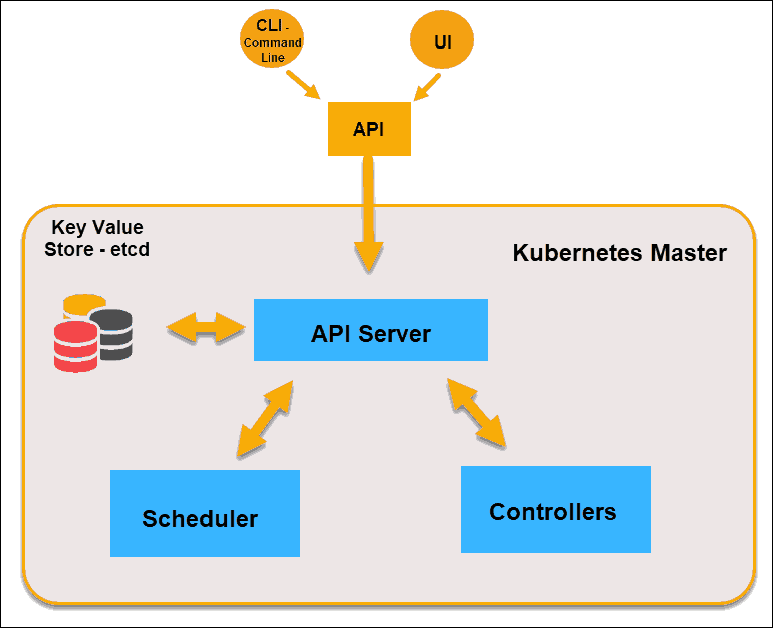

The master node has several components:

Kubernetes API Server: The API server is the central component of the Kubernetes master node. It provides a RESTful API for managing Kubernetes objects. It accepts requests from kubectl and other Kubernetes components, validates and processes them, and stores the state of the cluster in etcd; it is the only component that talks to etcd directly. The API server serves several API versions, including stable (v1), beta (e.g., v1beta1), and alpha (e.g., v1alpha1) versions; alpha APIs are disabled by default.
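Because every request goes through this REST interface, each object has a predictable URL. A minimal sketch, assuming namespaced resources: core-group resources such as pods are served under `/api/v1`, while resources in named API groups such as `apps/v1` are served under `/apis/<group>/<version>`. The helper `api_path` is hypothetical and only builds the path string.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the REST path of a namespaced resource on the API server.
# Usage: api_path <groupVersion> <namespace> <resource> [name]
api_path() {
  local group_version="$1" namespace="$2" resource="$3" name="${4:-}"
  local prefix path
  if [[ "$group_version" == "v1" ]]; then
    prefix="/api/v1"                      # core (legacy) API group
  else
    prefix="/apis/${group_version}"       # named API groups, e.g. apps/v1
  fi
  path="${prefix}/namespaces/${namespace}/${resource}"
  if [[ -n "$name" ]]; then
    path="${path}/${name}"                # a single object instead of the collection
  fi
  echo "$path"
}

api_path v1 default pods                  # /api/v1/namespaces/default/pods
api_path apps/v1 default deployments web  # /apis/apps/v1/namespaces/default/deployments/web
```

On a machine with cluster access, `kubectl get --raw "$(api_path v1 default pods)"` returns the raw JSON that `kubectl get pods` is built on.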

```bash
# Check the status of the Kubernetes API server (when it runs as a systemd service)
systemctl status kube-apiserver
# On kubeadm-based clusters, control-plane components run as static pods instead
kubectl get pods -n kube-system
# Get the version of the Kubernetes API server (the --short flag was removed in kubectl 1.28)
kubectl version | grep Server
```

etcd: etcd is a distributed, consistent key-value store that holds the configuration data of the Kubernetes cluster. It stores the current state of the cluster, including all objects and their configuration, and serves as the source of truth for the cluster. Kubernetes relies on etcd features such as watches, leases, and transactions.
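Inside etcd, the API server stores each object under a key derived from its resource type, namespace, and name. A minimal sketch, assuming the default `--etcd-prefix` of `/registry` and a typical namespaced resource such as pods (a few resources, e.g. Services, use a slightly different layout); `etcd_key` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: etcd key of a namespaced object, assuming the default prefix /registry.
ETCD_PREFIX="${ETCD_PREFIX:-/registry}"
etcd_key() {
  local resource="$1" namespace="$2" name="$3"
  echo "${ETCD_PREFIX}/${resource}/${namespace}/${name}"
}

etcd_key pods default nginx        # /registry/pods/default/nginx
etcd_key deployments default web   # /registry/deployments/default/web
```

On a kubeadm control-plane node (the certificate paths are kubeadm defaults), the key could be listed with `ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key get "$(etcd_key pods default nginx)" --keys-only`. The values are stored in a binary protobuf encoding, so reading objects through the API server is usually more practical.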

```bash
# Check the status of the etcd cluster (when etcd runs as a systemd service)
systemctl status etcd
# Get the version of etcd
etcdctl version
```

kube-scheduler: The scheduler assigns newly created pods to worker nodes. For each pod, it filters out the nodes that cannot run it (for example, because they lack the requested resources) and scores the remaining nodes to pick the best fit based on the pod's requirements. It honors scheduling constraints such as pod topology spread, node and pod affinity, and taints and tolerations.
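The filter-then-score idea can be illustrated with a toy sketch. This is not the real kube-scheduler, which runs many filter and score plugins; it assumes CPU is the only resource, drops nodes whose free CPU (in millicores) is below the pod's request, and then prefers the node with the most CPU left over, roughly in the spirit of the default least-allocated scoring. `pick_node` and the node names are hypothetical.

```bash
#!/usr/bin/env bash
# Toy filter-and-score scheduler (illustration only, not kube-scheduler's code).
# Usage: pick_node <cpu_request_millicores> <node:free_millicores>...
pick_node() {
  local request="$1"
  shift
  local best="" best_left=-1 entry node free left
  for entry in "$@"; do
    node="${entry%%:*}"
    free="${entry##*:}"
    # Filter phase: a node that cannot fit the request is not a candidate.
    if (( free < request )); then
      continue
    fi
    # Score phase: prefer the node with the most CPU left after placement.
    left=$(( free - request ))
    if (( left > best_left )); then
      best="$node"
      best_left="$left"
    fi
  done
  if [[ -z "$best" ]]; then
    echo "unschedulable"   # the real scheduler would leave the pod Pending
    return 1
  fi
  echo "$best"
}

pick_node 500 node-a:400 node-b:1500 node-c:900   # prints node-b
```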

```bash
# Check the status of the kube-scheduler
systemctl status kube-scheduler
```

kube-controller-manager: The controller manager runs the built-in Kubernetes controllers. Each controller watches the cluster through the API server and works to move the actual state of its objects toward the desired state; for example, it ensures that the desired number of pod replicas is running. The controller manager includes controllers such as the ReplicationController, ReplicaSet, Deployment, Node, and Job controllers, which together provide behaviors such as scaling and rolling updates. Canary deployments are not a built-in controller feature; they are usually built from multiple Deployments or with additional tooling.

```bash
# Check the status of the kube-controller-manager
systemctl status kube-controller-manager
```
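Every controller follows the same reconciliation pattern: compare the desired state with the observed state and act on the difference. A minimal sketch of that decision for replica counts, using a hypothetical `reconcile` helper; the real ReplicaSet controller also reacts to watch events, tracks pod ownership, and rate-limits its work.

```bash
#!/usr/bin/env bash
# Hypothetical reconcile step: decide what to do for a desired vs. actual replica count.
reconcile() {
  local desired="$1" actual="$2"
  if (( actual < desired )); then
    echo "create $(( desired - actual ))"
  elif (( actual > desired )); then
    echo "delete $(( actual - desired ))"
  else
    echo "in sync"
  fi
}

# Simulate a controller loop converging from 1 running replica to 3 desired.
desired=3
actual=1
while :; do
  action=$(reconcile "$desired" "$actual")
  echo "desired=$desired actual=$actual -> $action"
  [[ "$action" == "in sync" ]] && break
  actual=$desired   # pretend the create/delete calls succeeded
done
```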

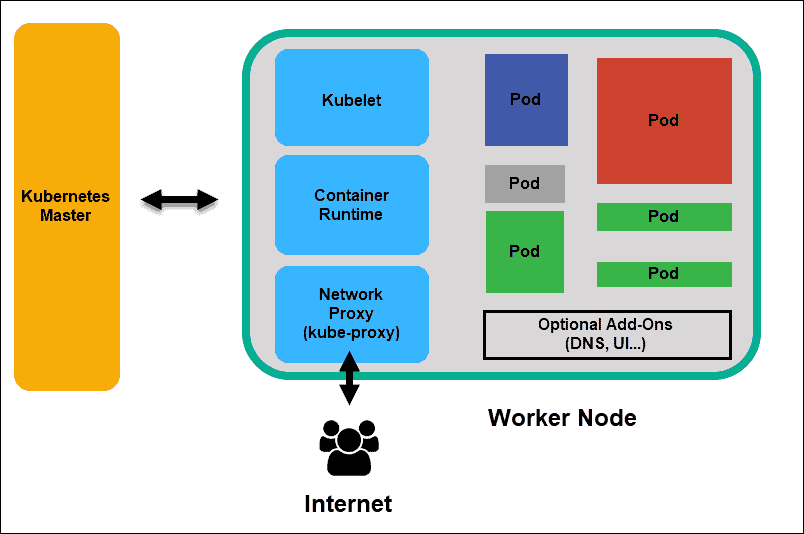

The worker nodes also have several components:

kubelet: The kubelet is the node agent that manages the pods on a worker node. It receives pod specifications from the API server, starts the pods' containers through the container runtime, checks that they stay in the desired state, and reports their actual state back to the API server. Its responsibilities include pod lifecycle management (including liveness and readiness probes), resource management, and applying the security settings defined for each pod.

```bash
# Check the status of the kubelet
systemctl status kubelet
```

kube-proxy: kube-proxy implements Kubernetes Services on each node. It watches Services and their endpoints and maintains network rules on the host (for example, iptables or IPVS rules) that perform network address translation (NAT), so that traffic sent to a Service's virtual IP reaches one of its backend pods. Direct pod-to-pod connectivity is provided by the CNI plugin rather than by kube-proxy.
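To see how NAT-based load balancing can spread traffic evenly, consider kube-proxy's iptables mode: for a Service with n endpoints, it installs a chain of rules in which the i-th rule matches at random with probability 1/(n-i+1) and the last rule always matches, so each endpoint receives an overall share of 1/n. The sketch below only computes those per-rule probabilities; `endpoint_probabilities` is a hypothetical helper, and kube-proxy writes the values with more decimal places.

```bash
#!/usr/bin/env bash
# Hypothetical helper: per-rule match probabilities in an iptables-style endpoint chain.
# Rule i (1-based) of n matches with probability 1/(n-i+1); the last rule always matches.
endpoint_probabilities() {
  local n="$1" i
  for (( i = 1; i <= n; i++ )); do
    awk -v n="$n" -v i="$i" 'BEGIN { printf "%.5f\n", 1 / (n - i + 1) }'
  done
}

endpoint_probabilities 3
# 0.33333
# 0.50000
# 1.00000
```

For three endpoints, the first rule takes 1/3 of the traffic, the second takes half of the remaining 2/3, and the last takes the rest, so each pod receives one third. On a node running kube-proxy in iptables mode, `sudo iptables -t nat -L KUBE-SERVICES` shows the entry point to these chains.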

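container runtime: The container runtime runs the containers on the worker node; the kubelet talks to it through the Container Runtime Interface (CRI). Kubernetes supports CRI-compatible runtimes such as containerd and CRI-O. Docker Engine can still be used through the cri-dockerd adapter, because the built-in dockershim was removed in Kubernetes 1.24.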

Kubernetes Installation and Configuration

To install Kubernetes, we need to install the Kubernetes components on the master node and the worker nodes. There are several ways to do this: you can use a managed Kubernetes service, such as Amazon EKS, Google Kubernetes Engine, or Microsoft Azure Kubernetes Service, where the provider runs the control plane for you, or you can install Kubernetes on your own infrastructure. The steps below install each component on your own machines; in practice, kubeadm automates most of them.

Installing Kubernetes on the Master Node

a. Install the Kubernetes API Server: The API server can be installed from the official release binaries or built from the source code; on kubeadm-based clusters it runs as a static pod instead. The API server requires a valid TLS certificate for secure communication with its clients.

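```bash
# Install the Kubernetes API server from the official release binaries.
# kube-apiserver is not published as an apt package in the official Kubernetes repository;
# v1.30.0 and linux/amd64 are example values, adjust them for your cluster.
curl -LO "https://dl.k8s.io/release/v1.30.0/bin/linux/amd64/kube-apiserver"
sudo install -m 0755 kube-apiserver /usr/local/bin/kube-apiserver
```

b. Install etcd: etcd can be installed from a distribution package, from the official etcd release binaries, or by building it from the source code. The etcd cluster should have an odd number of members (e.g., 3, 5, or 7) to ensure high availability and fault tolerance.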
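The reason for odd member counts is quorum arithmetic: etcd (via the Raft consensus protocol) needs a majority of members, floor(n/2)+1, to commit writes, so a cluster of n members tolerates n minus that majority in failures. The sketch below prints those numbers; the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Majority (quorum) needed by an etcd cluster of n members: floor(n/2) + 1.
etcd_quorum() {
  local members="$1"
  echo $(( members / 2 + 1 ))
}

# Members that can fail while a quorum is still available.
etcd_fault_tolerance() {
  local members="$1"
  echo $(( members - (members / 2 + 1) ))
}

for n in 1 2 3 4 5 6 7; do
  printf 'members=%d quorum=%d tolerated_failures=%d\n' \
    "$n" "$(etcd_quorum "$n")" "$(etcd_fault_tolerance "$n")"
done
```

The output shows that 4 members tolerate only one failure, the same as 3, while adding an extra member to coordinate; 5 members tolerate two.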

```bash
# Install etcd (the package name varies by distribution release, e.g. etcd-server on some Debian/Ubuntu releases)
sudo apt-get update
sudo apt-get install -y etcd
```

c. Install kube-scheduler: The scheduler can be installed from the official release binaries or by building it from the source code. The scheduler requires a kubeconfig file to communicate with the API server and access the cluster's resources.

```bash
# Install kube-scheduler from the release binaries (version and architecture are example values)
curl -LO "https://dl.k8s.io/release/v1.30.0/bin/linux/amd64/kube-scheduler"
sudo install -m 0755 kube-scheduler /usr/local/bin/kube-scheduler
```

d. Install kube-controller-manager: The controller manager can be installed from the official release binaries or by building it from the source code. The controller manager requires a kubeconfig file to communicate with the API server and access the cluster's resources.

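```bash
# Install kube-controller-manager from the release binaries (version and architecture are example values)
curl -LO "https://dl.k8s.io/release/v1.30.0/bin/linux/amd64/kube-controller-manager"
sudo install -m 0755 kube-controller-manager /usr/local/bin/kube-controller-manager
```

Installing Kubernetes on the Worker Nodes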

a. Install kubelet: The kubelet is available as a package from the official Kubernetes package repository (pkgs.k8s.io), as a release binary, or by building it from the source code. The kubelet requires a kubeconfig file to communicate with the API server and access the cluster's resources.

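```bash
# Install kubelet (assumes the pkgs.k8s.io apt repository for your Kubernetes minor version is already configured)
sudo apt-get update
sudo apt-get install -y kubelet
# Prevent routine upgrades from changing the kubelet version unexpectedly
sudo apt-mark hold kubelet
```

b. Install kube-proxy: The proxy can be installed from the official release binaries or by building it from the source code; kubeadm deploys it as a DaemonSet instead. The proxy requires a kubeconfig file to communicate with the API server and access the cluster's resources.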

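```bash
# Install kube-proxy from the release binaries (version and architecture are example values)
curl -LO "https://dl.k8s.io/release/v1.30.0/bin/linux/amd64/kube-proxy"
sudo install -m 0755 kube-proxy /usr/local/bin/kube-proxy
```

c. Install the container runtime: The container runtime is not part of the Kubernetes distribution; install it from your operating system's packages or from the runtime project's own releases. Kubernetes supports CRI-compatible runtimes such as containerd and CRI-O, and Docker Engine through the cri-dockerd adapter.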

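```bash
# Install the containerd container runtime
# (Docker Engine, package docker.io, can only be used through cri-dockerd on Kubernetes 1.24+)
sudo apt-get update
sudo apt-get install -y containerd
```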

Once the Kubernetes components are installed, we need to configure the cluster. The configuration includes setting up the networking, authentication, and authorization.

Networking: Kubernetes uses a container network interface (CNI) plugin to provide networking to the containers. We need to install a CNI plugin and configure it. Kubernetes supports several CNI plugins, such as Calico, Flannel, and Weave Net.
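For context, a CNI plugin is configured through a JSON file in `/etc/cni/net.d/` on each node. A minimal sketch, assuming the reference `bridge` plugin with `host-local` IP address management and an illustrative subnet; it writes the file to the current directory rather than `/etc/cni/net.d/`. Plugins such as Calico generate their own, more elaborate configuration when installed.

```bash
#!/usr/bin/env bash
# Write an illustrative CNI config for the reference bridge plugin (subnet and names are assumptions).
cat > 10-mynet.conf <<'EOF'
{
  "cniVersion": "0.4.0",
  "name": "mynet",
  "type": "bridge",
  "bridge": "cni0",
  "isGateway": true,
  "ipMasq": true,
  "ipam": {
    "type": "host-local",
    "subnet": "10.244.1.0/24",
    "routes": [{ "dst": "0.0.0.0/0" }]
  }
}
EOF
echo "wrote 10-mynet.conf"
```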

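```bash
# Install the Calico CNI plugin (v3.16 is an old release; check the Calico documentation for the current manifest URL)
kubectl apply -f https://docs.projectcalico.org/v3.16/manifests/calico.yaml
```

Authentication: Kubernetes provides several authentication mechanisms, including X.509 client certificates, bearer tokens (such as service account tokens), and OpenID Connect (OIDC) tokens; static basic authentication was removed in Kubernetes 1.19. We need to configure the mechanisms that best suit our needs. External identity providers, such as LDAP directories or OAuth servers, can be integrated through OIDC or webhook token authentication.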

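```bash
# Create a serving certificate for the API server, signed by the existing cluster CA (/etc/kubernetes/pki/ca.crt and ca.key).
# Client certificate authentication itself is enabled by starting kube-apiserver with --client-ca-file=/etc/kubernetes/pki/ca.crt
sudo mkdir -p /etc/kubernetes/pki/apiserver
sudo openssl genrsa -out /etc/kubernetes/pki/apiserver/apiserver-key.pem 2048
sudo openssl req -new -key /etc/kubernetes/pki/apiserver/apiserver-key.pem -out /etc/kubernetes/pki/apiserver/apiserver.csr -subj "/CN=kube-apiserver"
# Clients verify the server by name or IP, so the certificate needs subject alternative names.
# Example list: assumes the default cluster.local domain and service IP 10.96.0.1; also add the node's own IP and hostname.
echo "subjectAltName=DNS:kubernetes,DNS:kubernetes.default,DNS:kubernetes.default.svc,DNS:kubernetes.default.svc.cluster.local,IP:10.96.0.1" | sudo tee /etc/kubernetes/pki/apiserver/apiserver-san.ext > /dev/null
sudo openssl x509 -req -in /etc/kubernetes/pki/apiserver/apiserver.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -out /etc/kubernetes/pki/apiserver/apiserver.crt -days 365 -extfile /etc/kubernetes/pki/apiserver/apiserver-san.ext
```

Authorization: Kubernetes provides role-based access control (RBAC) to manage the authorization of users and services. We need to configure RBAC to control access to the Kubernetes resources. RBAC is built from Roles and ClusterRoles, which define permissions, and RoleBindings and ClusterRoleBindings, which grant those permissions to users, groups, or service accounts.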

```bash
# Create a role that allows a user to get and list pods in a namespace
kubectl create role pod-reader --verb=get,list --resource=pods --namespace=default
# Bind the role to a user (use --group for a group or --serviceaccount=<namespace>:<name> for a service account)
kubectl create rolebinding pod-reader-binding --role=pod-reader --user=user1 --namespace=default
# Verify the binding by impersonating the user
kubectl auth can-i list pods --as=user1 --namespace=default
# Create a service account for a pod
kubectl create serviceaccount my-service-account
```
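The same Role and RoleBinding can also be written declaratively, which is easier to review and keep in version control. A minimal sketch equivalent to the `kubectl create role` and `kubectl create rolebinding` commands in this step, reusing their names and namespace; the script only writes `rbac.yaml`, and applying it requires a cluster.

```bash
#!/usr/bin/env bash
# Write the declarative equivalent of the pod-reader Role and RoleBinding.
cat > rbac.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
  namespace: default
rules:
  - apiGroups: [""]          # "" is the core API group, where pods live
    resources: ["pods"]
    verbs: ["get", "list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: pod-reader-binding
  namespace: default
subjects:
  - kind: User
    name: user1
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
EOF
echo "wrote rbac.yaml"
```

With cluster access, `kubectl apply -f rbac.yaml` creates both objects in one step.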

Conclusion

Kubernetes provides a scalable and resilient platform to deploy and manage your applications, and it is becoming the de facto standard for container orchestration. In this blog post, we discussed the Kubernetes architecture and components, installation and configuration of Kubernetes, and the Kubernetes API server, etcd, kube-scheduler, kube-controller-manager, and kubelet. By understanding these components and using the provided commands, you can better manage your Kubernetes cluster and ensure that your applications are running smoothly.

Thank you for reading this blog. I hope you learned something new today! If you found this blog helpful, please like, share, and follow me for more posts like this in the future.

If you have any suggestions, I am happy to learn with you.

I would love to connect with you on LinkedIn

See you in the next blog... till then, Stay Safe ➕ Stay Healthy

#HappyLearning #Kubernetes #ContainerOrchestration #DevOps #KubernetesArchitecture #KubernetesComponents #KubernetesInstallation #KubernetesConfiguration #KubernetesAPIServer #etcd #kubeScheduler #kubeControllerManager #kubelet #devops #Kubeweek #KubeWeekChallenge #TrainWithShubham #KubeWeek_Challenge #kubeweek_day1